Researchers use reliability analysis to verify that a measurement instrument produces consistent and stable results. When a scale or test lacks consistency, statistical conclusions lose credibility. Survey instruments, behavioral checklists, and composite indices all require reliability evaluation before interpretation. Many datasets prepared for formal SPSS data analysis include at least one reliability checkpoint during preprocessing.

SPSS provides multiple reliability procedures that match different research designs, item formats, and rating structures. Each method evaluates a specific dimension of consistency, so method selection should follow the measurement design rather than convenience. This guide explains the major reliability approaches, their correct use cases, execution steps, and reporting standards.

Cronbach Alpha Reliability in SPSS

Cronbach alpha measures internal consistency for multi-item scales that use Likert or continuous response formats. Researchers apply this method when several items target one latent construct such as attitude, perception, or satisfaction. Every item must be coded in the same direction, so reverse-worded items need recoding before analysis; this step matters especially for ordinal instruments such as those covered in Likert scale data in SPSS.

Run the procedure through Analyze → Scale → Reliability Analysis. Move all scale items into the Items box, select Alpha as the model, and under Statistics request Scale if item deleted to obtain item diagnostics. Review the corrected item–total correlations and the alpha if item deleted values to detect misfitting items. Base any removal decision on construct logic, not on statistics alone.
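The item diagnostics above can be cross-checked outside SPSS. The following is a minimal Python sketch, assuming each item is stored as a list of numeric scores in the same respondent order with no missing values. The helper names (`cronbach_alpha`, `item_diagnostics`, `reverse_code`) and the response data are hypothetical, chosen only for illustration; they are not part of SPSS or any Python library.

```python
from math import sqrt
from statistics import mean, variance


def pearson(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def reverse_code(column, low=1, high=5):
    """Flip a reverse-worded item so high scores point the same way as other items."""
    return [low + high - x for x in column]


def cronbach_alpha(items):
    """Cronbach's alpha; `items` is a list of item columns (one score per respondent)."""
    k = len(items)
    totals = [sum(row) for row in zip(*items)]
    item_var_sum = sum(variance(col) for col in items)  # sample (n - 1) variances
    return (k / (k - 1)) * (1 - item_var_sum / variance(totals))


def item_diagnostics(items):
    """Return (item index, corrected item-total r, alpha if item deleted) per item."""
    rows = []
    for i, col in enumerate(items):
        rest = [c for j, c in enumerate(items) if j != i]
        rest_total = [sum(row) for row in zip(*rest)]  # total score without item i
        alpha_deleted = cronbach_alpha(rest) if len(rest) > 1 else float("nan")
        rows.append((i, pearson(col, rest_total), alpha_deleted))
    return rows


# Hypothetical 5-point Likert responses: 6 respondents x 4 items; item 3 is reverse-worded.
q1 = [4, 5, 3, 4, 2, 5]
q2 = [4, 4, 3, 5, 2, 4]
q3 = reverse_code([2, 1, 3, 2, 4, 1])
q4 = [3, 5, 2, 4, 2, 5]
scale = [q1, q2, q3, q4]

print(f"alpha = {cronbach_alpha(scale):.3f}")
for i, r, a in item_diagnostics(scale):
    print(f"item {i + 1}: corrected item-total r = {r:.3f}, alpha if deleted = {a:.3f}")
```

Comparing these values with the SPSS Item-Total Statistics table is a quick way to confirm that reverse-worded items were recoded as intended before the scale was scored.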

Common interpretation thresholds place acceptable consistency near 0.70, good consistency above 0.80, and very strong consistency above 0.90. Reports should include item count, sample size, and any item removal decisions.

Test–Retest Reliability in SPSS

Test–retest reliability evaluates score stability across time using repeated measurement on the same respondents. This design appears frequently in longitudinal and structured observational frameworks, and a cross sectional study can also incorporate it by re-administering the instrument to a subsample after a fixed interval.

Create paired variables for the two time points and compute a Pearson or Spearman correlation through Analyze → Correlate → Bivariate. A high positive coefficient indicates temporal stability. The retest interval must balance recall control with construct stability: very short gaps let respondents remember earlier answers and inflate agreement, while very long gaps allow real change in the construct.
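As a sketch of what the bivariate procedure computes for paired time points, the Python below calculates Pearson's r and Spearman's rho (Pearson's r on average ranks, so ties share a rank). It assumes complete pairs with no missing values; the scores and helper names are hypothetical.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def average_ranks(values):
    """Ranks 1..n, with tied values sharing the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # convert 0-based positions to 1-based ranks
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical total scores for the same 8 respondents at two time points.
time1 = [22, 30, 18, 25, 27, 15, 29, 21]
time2 = [24, 29, 17, 26, 28, 16, 30, 20]
print(f"Pearson r = {pearson(time1, time2):.3f}")
print(f"Spearman rho = {spearman(time1, time2):.3f}")
```

One design caveat: a correlation tracks relative standing, not score level, so if every respondent scored exactly 3 points higher at retest, r would still equal 1.0. When the score level itself must be stable, an absolute-agreement ICC is the stricter check.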

Reports should state the coefficient, time interval, and sample size.

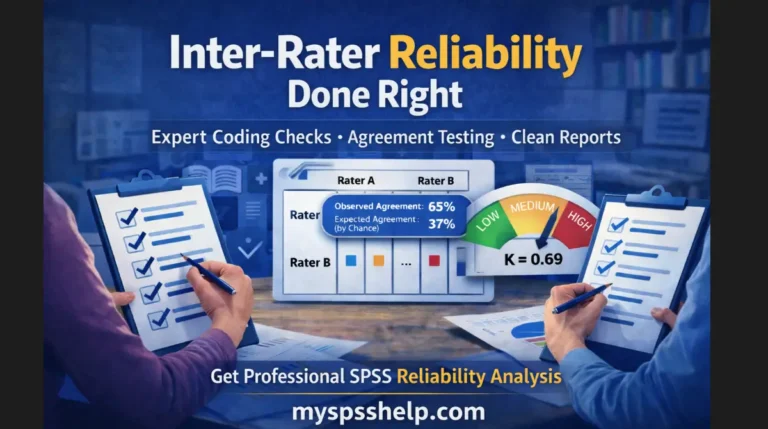

Inter-Rater Reliability in SPSS

Inter-rater reliability measures agreement across different raters who score the same subjects. Coding projects, clinical ratings, and behavioral observations rely on this statistic. Many applied survey coding tasks later move into broader survey data analysis pipelines after agreement checks.

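For categorical ratings from two raters, compute Cohen kappa through Analyze → Descriptive Statistics → Crosstabs, with Kappa selected under Statistics. For continuous ratings from two or more raters, compute an intraclass correlation coefficient (ICC) in the Reliability Analysis dialog by selecting Intraclass correlation coefficient under Statistics. Model choice depends on whether raters represent a fixed panel (two-way mixed) or a random sample of possible raters (two-way random), whether systematic differences between raters count as disagreement (absolute agreement) or not (consistency), and whether analysis targets single or mean ratings. Reports must specify the ICC model, type, and unit explicitly.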
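The Python below is a minimal sketch of both agreement statistics, useful for understanding what the SPSS output reports. Cohen's kappa compares observed agreement with the agreement expected from each rater's category proportions. The ICC function decomposes an n-subjects × k-raters table with a two-way ANOVA and returns single-measure consistency and absolute-agreement values; these should correspond to the single-measure rows of the SPSS two-way ICC output, but the sketch has not been validated against SPSS. The function names and all ratings are hypothetical, and no missing ratings are assumed.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical codes to the same subjects."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the raters' marginal counts, summed over categories.
    expected = sum(count_a[c] * count_b[c] for c in set(count_a) | set(count_b)) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (observed - expected) / (1 - expected)


def icc_single(ratings):
    """Single-measure ICCs from an n-subjects x k-raters table (two-way ANOVA).

    Returns (consistency, absolute_agreement).
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(r) / k for r in ratings]
    col_means = [sum(r[j] for r in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between raters
    ss_total = sum((x - grand) ** 2 for r in ratings for x in r)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    consistency = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    # Absolute agreement also penalizes systematic rater differences (ms_cols).
    absolute = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
    return consistency, absolute


# Hypothetical data: two raters coding 8 transcripts as "pos", "neg", or "neu".
coder1 = ["pos", "pos", "neg", "neu", "pos", "neg", "neu", "pos"]
coder2 = ["pos", "neu", "neg", "neu", "pos", "neg", "pos", "pos"]
print(f"Cohen kappa = {cohen_kappa(coder1, coder2):.3f}")

# Hypothetical data: 3 raters scoring 5 subjects on a 0-10 scale.
scores = [[7, 8, 7], [4, 5, 4], [9, 9, 8], [2, 3, 3], [6, 7, 6]]
consistency, absolute = icc_single(scores)
print(f"ICC consistency = {consistency:.3f}, absolute agreement = {absolute:.3f}")
```

The gap between the two ICC values shows why the type must be reported: a rater who scores every subject one point higher lowers absolute agreement but leaves consistency untouched.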

Intra-Rater Reliability in SPSS

Intra-rater reliability evaluates scoring consistency from the same rater across separate occasions. This method detects personal scoring drift and rubric instability. Store repeated ratings in paired columns and compute correlation or ICC for continuous data, or kappa for categorical data. When ratings meet interval-level assumptions, the same bivariate procedure described in Pearson correlation in SPSS applies.

High agreement reflects consistent rule application. Low agreement signals unclear scoring rules or insufficient rater calibration. Documentation should include the scoring interval and rating conditions.

Parallel and Alternate Forms Reliability in SPSS

Parallel and alternate forms reliability evaluates consistency between two equivalent versions of an instrument. Each form measures the same construct using matched but nonidentical items. Instrument developers often apply this strategy during structured questionnaire design phases similar to those used in academic surveys.

Collect total scores from both forms and compute a bivariate correlation. Strong correlation indicates equivalent measurement performance. Form balance in content coverage, difficulty, and length directly affects the coefficient.

Reports should describe form construction logic and present the correlation value.

Split-Half Reliability in SPSS

Split-half reliability estimates internal consistency by dividing a scale into two halves and correlating the part scores. SPSS computes this statistic in the Reliability Analysis procedure when you set the model to Split-half. The output applies the Spearman–Brown correction to estimate full-length reliability and also reports the Guttman split-half coefficient.

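Odd–even splitting usually produces balanced halves. SPSS assigns items to the two parts in the order they appear in the Items box, so list the items in alternating order when an odd–even split is required. Very short scales produce unstable half estimates, so longer instruments yield more dependable coefficients. Output should include the corrected split-half value and the splitting rule.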
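The mechanics of splitting and correcting can be sketched in a few lines of Python. This example assumes complete data and uses the equal-length Spearman–Brown formula 2r / (1 + r); the `split_half` helper, its `rule` values ("odd_even" and "in_order"), and the item data are hypothetical names and values chosen for illustration.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman_brown(r_half):
    """Step a half-test correlation up to full-length reliability."""
    return 2 * r_half / (1 + r_half)


def split_half(items, rule="odd_even"):
    """Split-half reliability with the Spearman-Brown correction.

    rule="odd_even": items 1, 3, 5, ... versus items 2, 4, 6, ...
    rule="in_order": first half of the item list versus the second half.
    """
    if rule == "odd_even":
        half_a, half_b = items[0::2], items[1::2]
    elif rule == "in_order":
        mid = (len(items) + 1) // 2
        half_a, half_b = items[:mid], items[mid:]
    else:
        raise ValueError(f"unknown rule: {rule}")
    score_a = [sum(row) for row in zip(*half_a)]  # part score per respondent
    score_b = [sum(row) for row in zip(*half_b)]
    r_half = pearson(score_a, score_b)
    return r_half, spearman_brown(r_half)


# Hypothetical 6-item scale, 6 respondents (each inner list is one item column).
items = [
    [3, 4, 2, 5, 4, 1],
    [3, 5, 2, 4, 4, 2],
    [2, 4, 1, 5, 3, 1],
    [3, 4, 2, 4, 5, 2],
    [4, 5, 2, 5, 4, 1],
    [3, 4, 3, 5, 4, 2],
]
for rule in ("odd_even", "in_order"):
    r_half, full = split_half(items, rule)
    print(f"{rule}: half-test r = {r_half:.3f}, Spearman-Brown = {full:.3f}")
```

Running both rules on the same data shows directly how much the coefficient depends on the splitting choice, which is why the rule belongs in the report.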

Kuder–Richardson Reliability KR-20 and KR-21 in SPSS

KR-20 and KR-21 measure internal consistency for dichotomous items scored as right (1) or wrong (0). Binary coded knowledge tests and screening tools often use this approach. Cronbach alpha computed on 0–1 coded items equals KR-20 mathematically, so the standard reliability dialog with the Alpha model produces KR-20 directly; KR-21 requires a short manual calculation.

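KR-20 uses each item's difficulty (its proportion correct), whereas KR-21 assumes all items share the same difficulty and needs only the mean and variance of total scores. KR-21 equals KR-20 when difficulties are equal and underestimates it otherwise, so KR-20 is the preferred coefficient. Reports should confirm binary coding and state which coefficient was computed.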
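A minimal Python sketch of both formulas follows, assuming 0/1 item columns with no missing values. It uses population (divide-by-n) variances throughout so that each item's p(1 − p) and the total-score variance are on the same footing; alpha computed with sample variances gives the same ratio. The `kr20_kr21` helper and the quiz data are hypothetical.

```python
from statistics import mean, pvariance


def kr20_kr21(items):
    """KR-20 and KR-21 for dichotomous items.

    `items` is a list of item columns, one 0/1 score per respondent.
    """
    for col in items:
        if any(x not in (0, 1) for x in col):
            raise ValueError("KR-20/KR-21 require items coded 0 (wrong) or 1 (right)")
    k = len(items)
    totals = [sum(row) for row in zip(*items)]
    var_total = pvariance(totals)
    p = [mean(col) for col in items]  # item difficulty: proportion correct
    sum_pq = sum(pi * (1 - pi) for pi in p)
    m = mean(totals)
    kr20 = (k / (k - 1)) * (1 - sum_pq / var_total)
    # KR-21 replaces the item-level p*q terms with a value implied by the mean alone.
    kr21 = (k / (k - 1)) * (1 - m * (k - m) / (k * var_total))
    return kr20, kr21


# Hypothetical 5-item quiz (1 = correct), 8 examinees; each inner list is one item.
quiz = [
    [1, 1, 1, 0, 1, 1, 0, 1],
    [1, 0, 1, 0, 1, 1, 0, 1],
    [0, 0, 1, 0, 1, 0, 0, 1],
    [1, 1, 1, 1, 1, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 0, 1],
]
kr20, kr21 = kr20_kr21(quiz)
print(f"KR-20 = {kr20:.3f}, KR-21 = {kr21:.3f}")
```

Running the same 0/1 columns through the Alpha model in SPSS should reproduce the KR-20 value, which makes a convenient sanity check on the coding.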

Omega Reliability in SPSS

Omega provides a model-based reliability estimate that, unlike alpha, does not assume equal factor loadings across items. Factor analytic workflows supply the loadings needed for omega calculation. Researchers often obtain these loadings through exploratory or confirmatory factor analysis, including models built in confirmatory factor analysis in SPSS (confirmatory models are typically fitted in the companion IBM SPSS Amos program).

If your SPSS release does not report omega in the Reliability Analysis dialog, calculate it from the extracted loadings and error variances. Omega performs well when a dominant general factor exists alongside unequal item contributions.
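The manual calculation can be sketched in Python for the simplest case: omega total for a single-factor model with the factor variance fixed at 1, where omega is the squared sum of loadings divided by that quantity plus the summed error variances. Hierarchical omega for bifactor models needs general-factor loadings separated from group factors and is not covered here. The `omega_total` helper and the loadings are hypothetical.

```python
def omega_total(loadings, error_variances=None):
    """McDonald's omega for a single-factor model with factor variance fixed at 1.

    loadings:        factor loadings, one per item
    error_variances: residual (unique) variances; if omitted, the loadings are
                     treated as standardized and each error variance is 1 - loading**2
    """
    if error_variances is None:
        error_variances = [1 - lam ** 2 for lam in loadings]
    common = sum(loadings) ** 2  # variance of the sum score attributable to the factor
    return common / (common + sum(error_variances))


# Hypothetical standardized loadings from a one-factor solution.
loadings = [0.82, 0.75, 0.64, 0.58, 0.71]
print(f"omega = {omega_total(loadings):.3f}")
```

When all standardized loadings are equal, omega matches standardized alpha (for three loadings of 0.7, both equal 1.47 / 1.98); the two coefficients diverge only as loadings become unequal, which is the situation where omega is preferred.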

Reports should document the loading source and calculation method.

Composite Reliability in SPSS

Composite reliability appears in structural equation modeling and confirmatory factor models. The statistic uses standardized loadings and measurement error terms from the fitted model. SEM workflows that estimate latent constructs rely on composite reliability alongside convergent validity metrics.

Researchers extract loadings and error variances from the measurement model output and compute the coefficient using the composite reliability formula. Reports should show coefficient values and measurement model context.
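For reference, a commonly used form of the composite reliability formula is shown below for a construct with k indicators, together with average variance extracted (AVE). The sketch assumes standardized loadings λᵢ from the measurement model, so each error variance is θᵢ = 1 − λᵢ².

```latex
\mathrm{CR} = \frac{\left(\sum_{i=1}^{k} \lambda_i\right)^2}
                   {\left(\sum_{i=1}^{k} \lambda_i\right)^2 + \sum_{i=1}^{k} \theta_i},
\qquad
\mathrm{AVE} = \frac{1}{k}\sum_{i=1}^{k} \lambda_i^2,
\qquad
\theta_i = 1 - \lambda_i^2
```

As a worked example with hypothetical loadings of 0.80, 0.70, and 0.60: the loadings sum to 2.10, whose square is 4.41; the error variances are 0.36, 0.51, and 0.64, summing to 1.51; so CR = 4.41 / (4.41 + 1.51) ≈ 0.745, and AVE = (0.64 + 0.49 + 0.36) / 3 ≈ 0.497.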

Choosing the Correct Reliability Method

Reliability method choice should match the measurement structure:

- Internal consistency methods for multi-item scales

- Stability methods for repeated measurements

- Agreement methods for rater scoring

- Equivalent form methods for alternate versions

- Model-based methods for latent factor models