Designing survey questions that yield accurate results is critical, especially in PhD-level research, where the stakes for data integrity are high. The quality of your survey data is only as good as the questions you ask. Whether you’re conducting academic research, organizational assessments, or product evaluations, poorly phrased questions can distort findings and lead to unreliable conclusions. For PhD researchers the risk is greater still, because data validity underpins the credibility of the entire study. In this article, we explore the anatomy of well-crafted survey questions, share real examples, and discuss advanced considerations for designing instruments that produce valid, reliable, and replicable results.

The Foundation of Good Survey Questions

Well-designed survey questions are clear, unbiased, and aligned with research objectives. They focus on a single concept, avoid emotionally charged language, and are framed for the respondent’s knowledge level. A well-written item elicits consistent responses (reliability) and measures the construct it is intended to measure (validity). Poor question design introduces bias or ambiguity and reduces the reliability of the data you collect.

Types of Survey Questions and When to Use Them

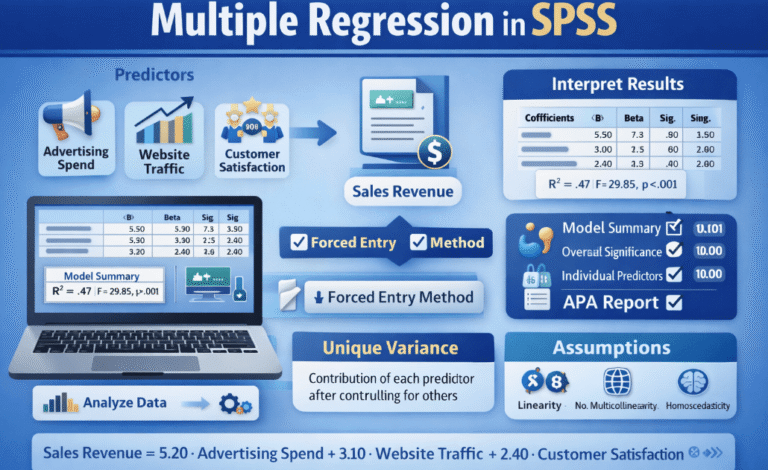

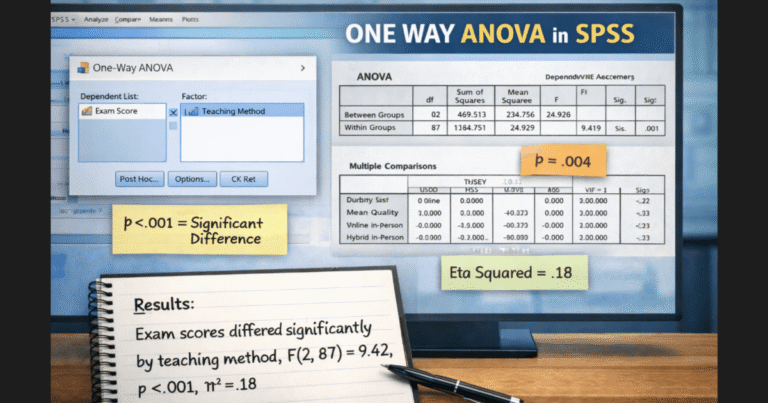

Closed-ended questions, such as multiple choice or Likert scales, are excellent for quantifying opinions or behaviors. For example, asking “How satisfied are you with your supervisor’s communication?” with response options ranging from “Very satisfied” to “Very dissatisfied” allows you to apply statistical methods easily. These questions are especially useful for trend analysis and regression modeling.
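As a minimal sketch of what applying statistical methods involves, the Python snippet below codes satisfaction responses as integers before summarizing them. It assumes a five-point scale; the midpoint label (“Neither satisfied nor dissatisfied”) and the 1 to 5 coding are illustrative choices, not part of the question above. Because Likert-type responses are ordinal, the median and frequency counts are reported alongside the mean.

```python
from collections import Counter
from statistics import mean, median

# Illustrative five-point coding; the midpoint label is an assumption.
SATISFACTION_CODES = {
    "Very dissatisfied": 1,
    "Dissatisfied": 2,
    "Neither satisfied nor dissatisfied": 3,
    "Satisfied": 4,
    "Very satisfied": 5,
}


def code_responses(labels: list[str]) -> list[int]:
    """Convert response labels to 1-5 codes; an unknown label raises KeyError."""
    return [SATISFACTION_CODES[label] for label in labels]


def summarize(codes: list[int]) -> dict:
    """Return the median, mean, and frequency of each code."""
    return {
        "median": median(codes),
        "mean": mean(codes),
        "counts": dict(sorted(Counter(codes).items())),
    }


if __name__ == "__main__":
    answers = ["Satisfied", "Very satisfied", "Dissatisfied", "Satisfied"]
    codes = code_responses(answers)
    print(codes)
    print(summarize(codes))
```

Raising an error on unrecognized labels is deliberate: a typo in an exported response label should stop the analysis rather than silently drop a respondent.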

Open-ended questions give respondents the freedom to express their thoughts. A question like “What changes would you suggest to improve the onboarding process?” can reveal insights not captured through structured options. This format is best suited for qualitative analysis, especially during exploratory research phases.

Demographic questions categorize participants by variables like age, education level, or location. Asking “What is the highest level of education you have completed?” helps you segment responses for subgroup analysis or reporting.

Semantic differential scales measure attitudes or perceptions along a continuum anchored by a pair of opposing adjectives. Asking “How would you rate the usability of the platform?” on a scale with “Easy” at one end and “Difficult” at the other provides a nuanced view of user experience.

Ranking questions let respondents prioritize items. For instance, asking them to rank mobile phone features like battery life, camera, and price helps in understanding what matters most. These are ideal for preference analysis and marketing research.
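Ranking data are often summarized by each item’s mean rank. The sketch below assumes every respondent ranks all three phone features, with 1 meaning most important; the function name `mean_ranks` and the sample orderings are hypothetical.

```python
from statistics import mean


def mean_ranks(rankings: list[list[str]]) -> list[tuple[str, float]]:
    """Average each item's position (1 = most important) across respondents.

    Each inner list is one respondent's ordering, most important first.
    Returns (item, mean rank) pairs sorted from lowest to highest mean rank.
    """
    positions: dict[str, list[int]] = {}
    for ordering in rankings:
        for rank, item in enumerate(ordering, start=1):
            positions.setdefault(item, []).append(rank)
    averages = [(item, mean(ranks)) for item, ranks in positions.items()]
    return sorted(averages, key=lambda pair: pair[1])


if __name__ == "__main__":
    responses = [
        ["battery life", "price", "camera"],
        ["price", "battery life", "camera"],
        ["battery life", "camera", "price"],
    ]
    for feature, avg in mean_ranks(responses):
        print(f"{feature}: {avg:.2f}")
```

A lower mean rank means respondents placed that feature higher on average. If some respondents rank only part of the list, the averages are no longer directly comparable and a different summary would be needed.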

Advanced Considerations in PhD-Level Research

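Operationalizing abstract constructs, that is, defining exactly how each one will be measured, is key. Instead of asking vague questions about “organizational commitment,” break the construct into its dimensions, such as affective, continuance, and normative commitment (the components of Meyer and Allen’s three-component model), and measure each with validated items.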
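Once a construct is split into dimensions, each dimension is typically scored separately. The sketch below averages item responses within the affective, continuance, and normative dimensions. The item IDs (`AC1`, `CC1`, and so on) are hypothetical placeholders, and treating a dimension with any unanswered item as missing is a simplifying assumption; a published scale’s own scoring instructions should take precedence.

```python
from statistics import mean
from typing import Optional

# Hypothetical item IDs; a real study would use a validated scale's published items.
DIMENSIONS = {
    "affective": ["AC1", "AC2", "AC3"],
    "continuance": ["CC1", "CC2", "CC3"],
    "normative": ["NC1", "NC2", "NC3"],
}


def subscale_scores(responses: dict[str, int]) -> dict[str, Optional[float]]:
    """Mean score per dimension for one respondent.

    Simplifying assumption: a dimension is scored only if every one of its
    items was answered; otherwise its score is None.
    """
    scores: dict[str, Optional[float]] = {}
    for dimension, items in DIMENSIONS.items():
        answered = [responses[item] for item in items if item in responses]
        scores[dimension] = mean(answered) if len(answered) == len(items) else None
    return scores


if __name__ == "__main__":
    respondent = {"AC1": 5, "AC2": 4, "AC3": 5,
                  "CC1": 2, "CC2": 3, "CC3": 1,
                  "NC1": 4, "NC2": 4}  # NC3 left blank
    print(subscale_scores(respondent))
```

Keeping the dimensions separate, rather than averaging all nine items together, preserves the distinctions that the theoretical model draws.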

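Using validated scales improves construct validity. Consider widely used instruments such as the Maslach Burnout Inventory or an established Big Five personality measure (for example, the Big Five Inventory) when they fit your constructs. Because their reliability and validity have already been tested in prior studies, these tools strengthen the scientific rigor of your study.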

Before launching your survey, pilot testing helps identify flaws in item wording or layout. Cognitive interviews with sample participants can reveal issues like confusion, fatigue, or misinterpretation. Adjusting based on this feedback improves data quality.

Biases can threaten validity. To mitigate acquiescence bias (the tendency to agree regardless of content), include both positively and negatively worded items. To reduce social desirability bias, use neutral phrasing and ensure anonymity. Randomizing item order helps reduce order and priming effects that might skew results.
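Below is a minimal sketch of per-respondent randomization, assuming a short hypothetical item bank that mixes positively and negatively worded items. Seeding Python’s `random.Random` with the respondent ID is a design choice made here so that each person’s item order can be reproduced and recorded for later order-effect checks; survey platforms usually provide their own randomization settings.

```python
import random

# Hypothetical items; "reverse" marks negatively worded (reverse-keyed) items.
ITEMS = [
    {"id": "Q1", "text": "My workload is manageable.", "reverse": False},
    {"id": "Q2", "text": "I often feel overwhelmed at work.", "reverse": True},
    {"id": "Q3", "text": "I have the resources I need.", "reverse": False},
    {"id": "Q4", "text": "I rarely have time to finish my tasks.", "reverse": True},
]


def item_order_for(respondent_id: str, items: list[dict]) -> list[str]:
    """Return a reproducible random order of item IDs for one respondent.

    A string seed gives the same shuffle for the same respondent ID on every
    run, so the order actually shown can be logged and re-derived later.
    """
    rng = random.Random(respondent_id)
    ids = [item["id"] for item in items]
    rng.shuffle(ids)
    return ids


if __name__ == "__main__":
    for rid in ["R001", "R002", "R003"]:
        print(rid, item_order_for(rid, ITEMS))
```

Storing the `reverse` flag alongside each item keeps the information needed to reverse-score those items at analysis time, whatever order they were shown in.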

Examples of Well-Written vs. Poorly Written Questions

A poorly framed question like “Don’t you agree the company treats employees unfairly?” is both leading and emotionally loaded. Instead, a neutral version such as “How fairly do you feel the company treats employees?” invites unbiased responses.

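Double-barreled questions combine two ideas, as in “How often do you eat fast food and exercise?” A respondent who eats fast food often but rarely exercises has no accurate answer to give. Instead, split the item into two: “How many times per week do you eat fast food?” and “How many times per week do you exercise?” Each answer then refers to a single behavior, which yields clearer data.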

Tailoring Questions for Different Populations

Questions must be customized based on the audience. For international respondents, avoid idioms or culturally specific terms. When surveying ESL participants, use simple language and avoid jargon. For age-specific surveys, ensure that the content is relatable and clearly understood by the target group.

Analyzing Responses for Accuracy

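Once data collection is complete, check the internal consistency of multi-item scales, for example with Cronbach’s alpha. Reverse-worded items can help identify inattentive respondents: someone who agrees with both an item and its reverse-worded counterpart may not be reading carefully. Examine patterns of missing data; if certain questions have high non-response rates, they may be unclear. Outlier responses should also be investigated, as they may indicate data-entry mistakes or misinterpretation.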
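The checks described above can be sketched in plain Python. This example assumes a five-point scale, hypothetical positions for the reverse-worded items, blanks stored as `None`, and an agreement threshold of 4 for flagging contradictory answers; all of these are illustrative choices. Cronbach’s alpha is computed as k/(k − 1) × (1 − sum of item variances / variance of total scores), using sample variances on complete responses only (listwise deletion).

```python
from statistics import variance
from typing import Optional

SCALE_MIN, SCALE_MAX = 1, 5  # assumes a five-point scale


def reverse_score(value: int) -> int:
    """Flip a reverse-keyed response so high scores mean the same on every item."""
    return SCALE_MIN + SCALE_MAX - value


def missing_rates(rows: list[list[Optional[int]]]) -> list[float]:
    """Share of respondents who left each item blank (None)."""
    n = len(rows)
    return [sum(row[j] is None for row in rows) / n for j in range(len(rows[0]))]


def flag_contradictions(rows: list[list[Optional[int]]],
                        pairs: list[tuple[int, int]],
                        agree_at: int = 4) -> list[int]:
    """Indices of respondents who agree with an item AND its reverse-worded twin (raw scores)."""
    flagged = []
    for i, row in enumerate(rows):
        for a, b in pairs:
            if row[a] is not None and row[b] is not None \
                    and row[a] >= agree_at and row[b] >= agree_at:
                flagged.append(i)
                break
    return flagged


def prepare(rows: list[list[Optional[int]]], reverse_items: set[int]) -> list[list[int]]:
    """Drop incomplete rows, then reverse-score the reverse-keyed item positions."""
    complete = [row for row in rows if None not in row]
    return [[reverse_score(v) if j in reverse_items else v for j, v in enumerate(row)]
            for row in complete]


def cronbach_alpha(rows: list[list[int]]) -> float:
    """Cronbach's alpha for complete rows (respondents x items)."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Hypothetical responses to four items; items at positions 1 and 3 are reverse-worded.
    rows = [
        [4, 2, 5, 1],
        [5, 1, 4, 2],
        [2, 4, 2, 4],
        [3, 3, 3, None],
        [4, 5, 4, 5],
    ]
    print("missing rate per item:", missing_rates(rows))
    print("possible inattentive respondents:", flag_contradictions(rows, [(0, 1), (2, 3)]))
    scored = prepare(rows, reverse_items={1, 3})
    print("Cronbach's alpha:", round(cronbach_alpha(scored), 2))
```

Note that contradictions are checked on raw scores, before reverse-scoring, because agreeing with both wordings is the signal of interest; alpha is computed after reverse-scoring so all items point in the same direction.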

Final Thoughts

Accurate survey data begins with precise and purposeful question design. For PhD researchers, each survey item must reflect the theoretical framework and research objectives. Thoughtful design enhances reliability, validity, and ultimately the credibility of your study.

For professional standards, refer to the American Association for Public Opinion Research (AAPOR).