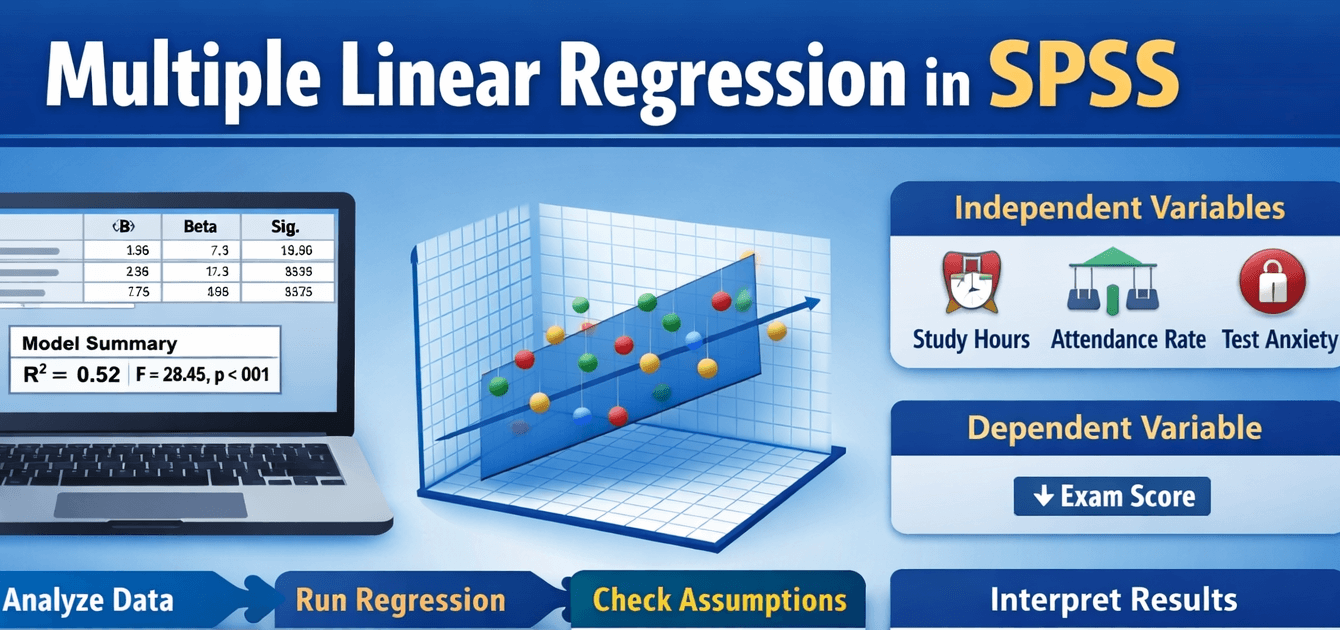

Multiple linear regression in SPSS is a statistical technique used to examine how two or more independent variables predict a single continuous dependent variable. Unlike simple linear regression, which tests only one predictor at a time, multiple linear regression lets you evaluate how several predictors work together. This makes it an essential tool in academic research, business analytics, psychology, healthcare, the social sciences, and many other fields.

In practical terms, multiple linear regression helps answer questions such as:

“Do study hours, attendance, and motivation together predict exam performance?”

or

“Do price, advertising spend, and brand awareness predict sales revenue?”

SPSS makes this analysis accessible by providing clear menus, automated output tables, and statistical tests. However, understanding how to run a multiple linear regression in SPSS correctly and how to interpret multiple linear regression results in SPSS is essential to ensure valid conclusions. Errors in interpretation or assumption checking can easily lead to misleading findings.

In this guide, you will learn what multiple linear regression is, how to perform it in SPSS, how to interpret the results, how to present output in APA format, the key assumptions of multiple linear regression in SPSS, when the method is and is not appropriate, and which common mistakes to avoid. By the end, you will be able to apply this method confidently in real-world research.

How to Run a Multiple Linear Regression in SPSS

To demonstrate, assume we want to predict Exam Score (DV) using three predictors:

- Study Hours

- Attendance Rate

- Test Anxiety

Step-by-Step Procedure

- Open your dataset in SPSS.

- Click Analyze on the top menu.

- Select Regression → Linear…

- Move your dependent variable (e.g., Exam Score) into the Dependent box.

- Move your independent variables (Study Hours, Attendance Rate, Test Anxiety) into the Independent(s) box.

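- Click Statistics… and select:

  - Estimates

  - Model fit

  - Confidence intervals

  - Descriptives (optional)

  - Collinearity diagnostics (reports Tolerance and VIF)

  - Durbin-Watson, under Residuals

- Click Continue.

- Optionally, click Plots…, place *ZRESID in the Y box and *ZPRED in the X box, and tick Histogram and Normal probability plot. Click Continue.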

- Click OK to run the analysis.

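SPSS will generate output including the Model Summary (R, R², and adjusted R²), the ANOVA table (the overall F test of the model), and the Coefficients table (B, its standard error, Beta, t, and p for each predictor). Together, these tables show how well the model fits, whether it is statistically significant, and how much each predictor contributes.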
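Behind the menus, the B values in the Coefficients table are ordinary least squares estimates. The minimal sketch below shows that computation in plain Python with no third-party libraries: it adds a constant column for the intercept, solves the normal equations (XᵀX)b = Xᵀy, and reports R². The data and the helper names `solve` and `fit_ols` are hypothetical, and solving the normal equations directly is a teaching simplification; statistical packages may use numerically more stable methods, so tiny differences in the last decimal places are possible.

```python
# Minimal ordinary least squares (OLS) sketch in plain Python.
# Assumptions: hypothetical data, no missing values, predictors not perfectly collinear.


def solve(a, b):
    """Solve the linear system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        # Swap in the row with the largest absolute value in this column for stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_ols(X, y):
    """Return (coefficients, r_squared); coefficients[0] is the intercept, the rest are the B values."""
    rows = [[1.0] + list(x) for x in X]  # constant column for the intercept
    p = len(rows[0])
    # Normal equations: (X^T X) b = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    b = solve(xtx, xty)
    fitted = [sum(bi * xi for bi, xi in zip(b, r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return b, 1 - ss_res / ss_tot


# Hypothetical data: study hours, attendance rate (%), test anxiety, exam score.
hours = [5, 8, 3, 10, 6, 7, 2, 9]
attendance = [80, 90, 60, 95, 85, 70, 55, 88]
anxiety = [4, 2, 7, 1, 3, 5, 8, 2]
scores = [62, 78, 45, 88, 70, 66, 40, 82]

coefficients, r_squared = fit_ols(list(zip(hours, attendance, anxiety)), scores)
print("Intercept and B:", [round(c, 3) for c in coefficients])
print("R squared:", round(r_squared, 3))
```

Running the same data through Analyze → Regression → Linear should give the same intercept, B values, and R² up to rounding.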

Sample APA-Style Multiple Linear Regression Output

Below is a simplified example of an APA-formatted regression table based on hypothetical data.

Multiple Linear Regression Predicting Exam Scores

| Predictor | B | SE B | Beta | t | p |
|---|---|---|---|---|---|
| Study Hours | 2.45 | 0.60 | .42 | 4.08 | <.001 |
| Attendance Rate | 0.35 | 0.14 | .28 | 2.50 | .014 |
| Test Anxiety | -1.80 | 0.55 | -.31 | -3.27 | .002 |

Note. R² = .48, Adjusted R² = .46, F(3, 116) = 36.82, p < .001.
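Several numbers in a regression table can be checked against each other. Each t is B divided by its standard error; adjusted R² is 1 - (1 - R²)(n - 1)/(n - k - 1); and the overall F is (R²/k) / ((1 - R²)/(n - k - 1)), where k is the number of predictors and n is the sample size. Here n = 120 is inferred from the denominator degrees of freedom (116 = n - k - 1). The sketch below applies these formulas to the hypothetical table; the function names are illustrative.

```python
def t_value(b, se):
    """t statistic for one coefficient: B divided by its standard error."""
    return b / se


def adjusted_r2(r2, n, k):
    """Adjusted R squared for n cases and k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def overall_f(r2, n, k):
    """Overall F statistic for the model, with df = (k, n - k - 1)."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


n, k = 120, 3  # inferred from F(3, 116), since df2 = n - k - 1
table = [("Study Hours", 2.45, 0.60), ("Attendance Rate", 0.35, 0.14), ("Test Anxiety", -1.80, 0.55)]
for name, b, se in table:
    print(f"{name}: t = {t_value(b, se):.2f}")  # 4.08, 2.50, -3.27
print(f"adjusted R2 = {adjusted_r2(0.48, n, k):.3f}")  # 0.467
print(f"F = {overall_f(0.48, n, k):.2f}")  # 35.69
```

The t values reproduce the table. Recomputed from the rounded R² of .48, adjusted R² comes out at about .467 and F at about 35.69 rather than the .46 and 36.82 in the note, so when reporting real results, copy these statistics from the SPSS Model Summary and ANOVA tables and confirm that they agree with one another.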

How to Interpret Multiple Linear Regression Results in SPSS

Using the sample table above, the results could be written up in academic style as follows:

A multiple linear regression was conducted to examine whether study hours, attendance rate, and test anxiety predicted exam performance. The model was statistically significant, F(3, 116) = 36.82, p < .001, explaining 48% of the variance in exam scores (adjusted R² = .46).

Study hours were a significant positive predictor of exam scores (B = 2.45, p < .001): holding attendance rate and test anxiety constant, each additional hour of study was associated with a 2.45-point increase in predicted exam score. Attendance rate also significantly predicted exam scores (B = 0.35, p = .014), with each one-unit increase in attendance rate associated with a 0.35-point increase in predicted score. Test anxiety was a significant negative predictor (B = -1.80, p = .002); each one-unit increase in anxiety was associated with a 1.80-point decrease in predicted exam score.
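Because each B is a partial slope, this interpretation can be checked by comparing the predicted scores of two cases that differ on one predictor only. The intercept is not reported in the sample table, but it cancels when two predictions are subtracted, so the sketch below needs only the B values. The students, their values, and the units of attendance rate (assumed here to be percentage points) are hypothetical.

```python
# B values from the sample table (hypothetical data); the intercept is not needed here.
B = {"study_hours": 2.45, "attendance_rate": 0.35, "test_anxiety": -1.80}


def predicted_difference(case_a, case_b):
    """Predicted exam score of case_a minus that of case_b; the intercept cancels."""
    return sum(B[name] * (case_a[name] - case_b[name]) for name in B)


# Two hypothetical students who differ only in study hours.
student_1 = {"study_hours": 10, "attendance_rate": 85, "test_anxiety": 3}
student_2 = {"study_hours": 11, "attendance_rate": 85, "test_anxiety": 3}
print(round(predicted_difference(student_2, student_1), 2))  # 2.45

# A third student with two more study hours and one point more anxiety than student_1.
student_3 = {"study_hours": 12, "attendance_rate": 85, "test_anxiety": 4}
print(round(predicted_difference(student_3, student_1), 2))  # 3.1
```

The second comparison shows how effects combine in the model: two extra hours add 4.90 points and one extra anxiety point subtracts 1.80, for a net predicted gain of 3.10 points.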

Assumptions of Multiple Linear Regression in SPSS

1. Linearity

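The relationship between each predictor and the dependent variable should be linear.

Checked using scatterplots of the dependent variable against each predictor, or partial regression plots (Plots → Produce all partial plots).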

2. Independence of Errors

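Residuals should be independent of one another.

Assessed via the Durbin-Watson statistic, which ranges from 0 to 4. Values near 2 indicate no first-order autocorrelation, and values roughly between 1.5 and 2.5 are commonly treated as acceptable.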
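The Durbin-Watson statistic is the sum of squared differences between successive residuals divided by the sum of squared residuals, with cases taken in the order they appear in the data file. The minimal sketch below computes it in plain Python. The residuals are hypothetical and are assumed to be in data-collection order, since the statistic is only meaningful when case order is (for example, time-ordered observations).

```python
def durbin_watson(residuals):
    """Sum of squared differences between successive residuals, divided by the sum of squared residuals."""
    numerator = sum((residuals[i] - residuals[i - 1]) ** 2 for i in range(1, len(residuals)))
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Hypothetical residuals, listed in the order the cases were collected.
residuals = [2, 1, -1, -2, -1, 1, 2, 1]
print(round(durbin_watson(residuals), 2))  # 0.76, well below 2: positive autocorrelation
```

Neighbouring residuals that tend to share the same sign pull the statistic toward 0, while residuals that alternate in sign push it toward 4.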

3. Homoscedasticity

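Residuals should have roughly equal variance across all predicted values.

Checked using a scatterplot of standardized residuals (*ZRESID) against standardized predicted values (*ZPRED): a random, evenly spread band of points suggests homoscedasticity, while a funnel shape suggests heteroscedasticity.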

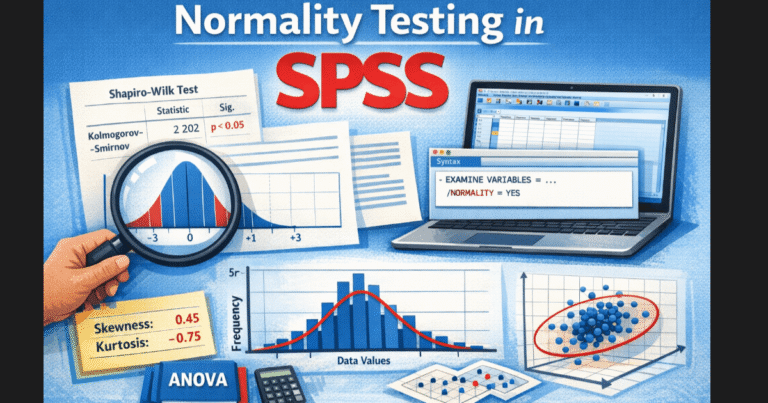
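Assuming the usual definitions, *ZPRED is the predicted value standardized to mean 0 and standard deviation 1, and *ZRESID is the residual divided by the standard error of the estimate (the square root of the residual mean square, with n - k - 1 degrees of freedom for k predictors). The sketch below computes both and adds a rough numeric summary comparing the average size of the standardized residuals below and above the mean prediction. That summary is an informal aid for reading the plot, not a formal heteroscedasticity test, and all names and data are hypothetical.

```python
import math


def zpred_zresid(predicted, residuals, k):
    """Standardized predicted values and standardized residuals for a model with k predictors."""
    n = len(predicted)
    mean_p = sum(predicted) / n
    sd_p = math.sqrt(sum((p - mean_p) ** 2 for p in predicted) / (n - 1))
    root_mse = math.sqrt(sum(e ** 2 for e in residuals) / (n - k - 1))
    zpred = [(p - mean_p) / sd_p for p in predicted]
    zresid = [e / root_mse for e in residuals]
    return zpred, zresid


def spread_by_half(zpred, zresid):
    """Rough check (not a formal test): mean |ZRESID| below vs at or above the mean prediction."""
    low = [abs(r) for p, r in zip(zpred, zresid) if p < 0]
    high = [abs(r) for p, r in zip(zpred, zresid) if p >= 0]
    return sum(low) / len(low), sum(high) / len(high)


# Hypothetical output from a three-predictor model: residuals widen as predictions rise.
predicted = [50, 55, 60, 65, 70, 75, 80, 85]
residuals = [1, -1, 2, -2, 4, -4, 6, -6]
zpred, zresid = zpred_zresid(predicted, residuals, k=3)
print(spread_by_half(zpred, zresid))
```

Here the second value is more than three times the first, the numeric counterpart of a funnel shape in the *ZRESID by *ZPRED scatterplot.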

4. Normality of Residuals

Residuals should be approximately normally distributed.

Checked using a histogram of the standardized residuals or a normal P-P or Q-Q plot.
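A Q-Q plot pairs each sorted residual with the value a normal distribution would predict at the same rank. The sketch below standardizes the residuals and pairs them with standard normal quantiles from Python's `statistics.NormalDist`; if the residuals are roughly normal, the pairs fall close to the line y = x. The plotting position (i - 0.5)/n is one common choice and is an assumption here: software packages offer several formulas, so the exact quantiles may differ slightly from those SPSS plots. The residuals are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def qq_points(residuals):
    """Pair standard normal quantiles with sorted, standardized residuals."""
    n = len(residuals)
    m, s = mean(residuals), stdev(residuals)
    standardized = sorted((r - m) / s for r in residuals)
    std_normal = NormalDist()  # mean 0, standard deviation 1
    # Plotting position (i - 0.5) / n: one common choice among several.
    return [(std_normal.inv_cdf((i - 0.5) / n), z) for i, z in enumerate(standardized, start=1)]


# Hypothetical residuals from a fitted model.
residuals = [1.2, -0.4, 0.3, -1.8, 0.9, -0.2, 2.1, -1.1, 0.1, -0.6]
for expected, observed in qq_points(residuals):
    print(f"expected {expected:6.2f}   observed {observed:6.2f}")
```

Large, systematic gaps between the expected and observed columns at either end point to skewed or heavy-tailed residuals.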

5. No Multicollinearity

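Predictors should not be too highly correlated with one another.

Checked using VIF and Tolerance, where Tolerance = 1 - R² from regressing a predictor on all the other predictors, and VIF = 1 / Tolerance. A VIF above 10 (Tolerance below .10) is a common warning sign, and some researchers apply a stricter cutoff of 5.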
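With only two predictors, the R² in the Tolerance formula is simply the squared correlation between them, which keeps the sketch below short. With three or more predictors, that R² has to come from an auxiliary regression of each predictor on all the others, which is what SPSS's collinearity diagnostics do for you. The predictor values and the names `predictor_a` and `predictor_b` are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def tolerance_and_vif(r_squared_j):
    """Tolerance = 1 - R squared of predictor j on the others; VIF = 1 / Tolerance."""
    tolerance = 1 - r_squared_j
    return tolerance, 1 / tolerance


# Two-predictor case: R squared for either predictor is their squared correlation.
predictor_a = [1, 2, 3, 4, 5]
predictor_b = [2, 4, 5, 4, 5]
tol, vif = tolerance_and_vif(pearson_r(predictor_a, predictor_b) ** 2)
print(round(tol, 2), round(vif, 2))  # 0.4 2.5
```

A correlation of about .77 between the two predictors already gives a VIF of 2.5; the VIF only passes 10 once R² for a predictor exceeds .90.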

When Is Multiple Linear Regression in SPSS Appropriate?

Multiple linear regression in SPSS is appropriate when your goal is to predict or explain a single continuous dependent variable using two or more independent variables. It is best suited for situations where the outcome is numeric, such as test scores, income level, satisfaction ratings, reaction time, blood pressure, or sales revenue. Researchers use multiple linear regression when they want to understand how several factors together influence an outcome, and to determine which predictors make the strongest contribution.

Multiple linear regression is particularly appropriate when:

- Your dependent variable is continuous (interval or ratio scale)

- You have two or more predictor variables

- Predictors may be continuous or categorical (if coded correctly, e.g., dummy coding)

- You want to examine the combined effect of predictors

- You want to test the unique contribution of each predictor while controlling for others

- Relationships between variables are expected to be linear

- Your sample size is adequate for regression analysis
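Dummy coding turns a categorical predictor with k categories into k - 1 indicator variables of 0s and 1s, leaving out one reference category; each dummy's B is then the difference from the reference category, holding the other predictors constant. In SPSS this is typically done with Transform → Recode into Different Variables or Compute Variable. The plain-Python sketch below only illustrates the logic, and the variable `study_mode` and its categories are hypothetical.

```python
def dummy_code(values, reference):
    """Return one 0/1 indicator column per non-reference category (k - 1 columns for k categories)."""
    categories = sorted(set(values) - {reference})
    return {cat: [1 if v == cat else 0 for v in values] for cat in categories}


# Hypothetical categorical predictor with three categories; "in_person" is the reference.
study_mode = ["online", "in_person", "hybrid", "online", "in_person"]
print(dummy_code(study_mode, reference="in_person"))
# {'hybrid': [0, 0, 1, 0, 0], 'online': [1, 0, 0, 1, 0]}
```

Entering all three indicators plus the intercept would make the predictors perfectly collinear, which is why one category is always left out.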

For example, it is appropriate to use multiple linear regression in SPSS when predicting exam performance from study hours, attendance rate, and anxiety level, or when analysing which demographic and behavioural factors predict customer spending.

When Is Multiple Linear Regression in SPSS NOT Appropriate?

There are times when multiple linear regression is not appropriate, and using it may lead to misleading or invalid results. Multiple regression should not be used when the dependent variable is categorical (such as pass/fail, yes/no, or treatment/control). In those cases, logistic regression (binary, or multinomial for more than two categories) is a more suitable method. It is also not appropriate when you have only one predictor variable, because simple linear regression is then the correct technique.

Multiple linear regression is also inappropriate when the sample size is too small relative to the number of predictors, when assumptions such as linearity or normality are severely violated, or when predictors are extremely highly correlated (multicollinearity). Additionally, regression analysis is not a substitute for experimental design and cannot prove causation unless the data come from a properly controlled study.
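One widely cited rule of thumb (Green, 1991) suggests at least 50 + 8k cases to test the overall model and at least 104 + k cases to test individual predictors, where k is the number of predictors. The sketch below applies it; treat the result as a rough floor rather than a substitute for a formal power analysis, which depends on the effect size you expect. The function name is illustrative.

```python
def minimum_sample_size(k):
    """Green (1991) rule of thumb for k predictors; a rough floor, not a power analysis."""
    return {
        "overall_model": 50 + 8 * k,       # testing R squared / the overall F test
        "individual_predictors": 104 + k,  # testing each predictor's B
    }


print(minimum_sample_size(3))  # {'overall_model': 74, 'individual_predictors': 107}
```

With the three predictors in the worked example this gives 74 and 107 cases, so the hypothetical sample of 120 implied by F(3, 116) meets both thresholds.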

Common Mistakes to Avoid

Many researchers make avoidable errors when running multiple linear regression in SPSS. These include:

- Using categorical predictors without proper coding

- Ignoring multicollinearity

- Reporting only significance without effect sizes

- Misinterpreting Beta vs B coefficients

- Using regression on small sample sizes

- Not testing assumptions

- Overfitting the model

- Treating correlation as causation
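On the Beta vs B point: B is expressed in the original units of each predictor, whereas Beta rescales it by the ratio of standard deviations, Beta = B × SD(predictor) / SD(outcome). B values therefore cannot be compared directly across predictors measured in different units: attendance rate has the smallest B in the sample table (0.35), yet its Beta (.28) is not far below those of the other predictors. The sketch below converts B to Beta; the standard deviations are hypothetical values chosen for illustration so that the results line up with the Beta column of the sample table.

```python
def standardized_beta(b, sd_x, sd_y):
    """Convert an unstandardized coefficient B to a standardized Beta."""
    return b * sd_x / sd_y


SD_EXAM = 17.5  # hypothetical SD of exam scores
predictors = {
    "Study Hours": (2.45, 3.0),        # (B from the table, hypothetical SD of the predictor)
    "Attendance Rate": (0.35, 14.0),
    "Test Anxiety": (-1.80, 3.0),
}
for name, (b, sd_x) in predictors.items():
    print(f"{name}: B = {b}, Beta = {standardized_beta(b, sd_x, SD_EXAM):.2f}")
```

Use B to describe change in exam points per unit of a predictor, and Beta to compare the relative strength of predictors measured on different scales.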