Inter-rater reliability measures how consistently different raters or observers score the same subjects, behaviors, or responses. When two or more people evaluate the same data, their ratings should align if the scoring rules and measurement framework work properly. Strong agreement across raters increases confidence in results, conclusions, and downstream statistical analysis.

Researchers across psychology, education, health sciences, and survey research rely on inter-rater reliability to validate observational and coded data. Many projects move from structured instruments into full survey data analysis workflows only after raters demonstrate acceptable agreement. Without that checkpoint, measurement error can dominate results.

This guide defines inter-rater reliability, explains how it works and how researchers calculate it, and shows how Cohen’s kappa supports agreement measurement in real studies.

Inter-Rater Reliability Definition and Meaning

Inter-rater reliability describes the degree of agreement among independent raters who evaluate the same phenomenon using the same scoring system. It forms one part of the broader reliability framework used in statistical measurement, alongside the other methods explained in this guide to reliability in SPSS. Each rater applies the same rubric, checklist, or coding scheme to identical material. Agreement indicates that the scoring framework produces stable judgments across people, not just within one evaluator.

The concept centers on consistency across observers. If three trained coders review interview transcripts and assign similar category labels, the coding system demonstrates strong inter-rater reliability. If their labels differ widely, the system shows weak reliability and needs refinement.

In research design, this concept answers a practical question: Do different qualified people reach the same scoring decision when they follow the same rules?

Fields that frequently use multi-rater scoring include:

- Behavioral observation

- Clinical diagnosis rating

- Content analysis

- Educational assessment

- Structured survey coding

- Qualitative-to-quantitative data conversion

Many instruments used in structured academic surveys require coder agreement checks before researchers publish results.

Inter-Rater Reliability in Psychology and Behavioral Research

Inter-rater reliability in psychology plays a central role in observational and diagnostic measurement. Psychologists often rate symptoms, behaviors, or responses using defined criteria. Multiple clinicians or researchers score the same case material to confirm scoring stability.

For example, two clinicians might rate therapy session recordings using a behavioral checklist. If both clinicians assign similar scores across most items, the checklist shows strong inter-rater reliability in psychology settings. If scores differ sharply, the checklist or training protocol needs adjustment.

Psychological measurement frameworks emphasize:

- Clear operational definitions

- Structured scoring rubrics

- Rater training sessions

- Calibration exercises

- Trial scoring rounds

Researchers often pair these steps with quantitative checks such as correlation or agreement coefficients. When ratings follow numeric scales, the procedure resembles running a Pearson correlation in SPSS.

When Researchers Use Inter-Rater Reliability

Researchers apply inter-rater reliability when human judgment enters the measurement process. Any time observer interpretation influences scores, agreement testing becomes necessary.

Common use cases include:

- Coding open-ended survey responses

- Rating classroom performance

- Scoring clinical interviews

- Evaluating recorded behaviors

- Classifying qualitative themes

- Reviewing document content

Large applied studies, including designs such as a cross-sectional study, often include coder teams who label responses or cases. Agreement metrics protect those results from individual bias.

You should plan inter-rater reliability checks during the study design stage, not after data collection ends.

How Inter-Rater Reliability Works in Practice

Inter-rater reliability works through a structured scoring and comparison process.

Researchers first build a scoring framework with explicit category definitions. They then train raters using examples and scoring guides. Next, raters independently score the same subset of material. After scoring, the researcher compares ratings across raters using an agreement statistic.

A typical workflow follows these steps:

- Define rating categories and scoring rules

- Train raters with sample material

- Run a pilot scoring round

- Compare rater scores statistically

- Refine rules if agreement is low

- Proceed with full scoring

This process creates measurable evidence that the scoring system performs consistently across people. Many teams integrate this checkpoint before advanced modeling steps such as those used in confirmatory factor analysis in SPSS, where measurement quality directly affects model fit.

How Researchers Measure Inter-Rater Reliability

Researchers measure inter-rater reliability using statistics that match the data type and rater structure. Different metrics serve different scoring formats.

For continuous ratings, researchers often compute:

- Intraclass correlation coefficient (ICC)

- Pearson correlation

For categorical ratings, researchers often compute:

- Cohen’s kappa

- Weighted kappa

- Fleiss’ kappa for more than two raters

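Percent agreement alone does not provide enough rigor because it ignores agreement that occurs by chance. Two raters who each assign the same category to most items will agree often even if they score at random. Chance-corrected coefficients such as kappa adjust for this and provide more defensible evidence.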
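
To make the chance problem concrete, here is a minimal Python sketch that compares raw percent agreement with the agreement two raters would reach by chance given their own label frequencies. The data and the function names (`percent_agreement`, `chance_agreement`) are hypothetical and chosen for illustration only.

```python
from collections import Counter

def percent_agreement(a, b):
    """Share of items on which both raters gave the same label."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

def chance_agreement(a, b):
    """Agreement expected if each rater labelled items at random,
    keeping their own observed label frequencies."""
    n = len(a)
    freq_a, freq_b = Counter(a), Counter(b)
    labels = set(freq_a) | set(freq_b)
    return sum((freq_a[c] / n) * (freq_b[c] / n) for c in labels)

# Hypothetical data: 20 items, each rater says "yes" 18 times
rater_a = ["yes"] * 18 + ["no"] * 2
rater_b = ["yes"] * 16 + ["no"] * 2 + ["yes"] * 2

print(f"percent agreement: {percent_agreement(rater_a, rater_b):.2f}")  # 0.80
print(f"chance agreement:  {chance_agreement(rater_a, rater_b):.2f}")   # 0.82
```

In this made-up case the raters agree on 80% of items, yet chance alone would produce about 82% because both raters say "yes" so often. The raw percentage looks healthy while carrying no evidence of real agreement.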

Choice of metric should match:

- Number of raters

- Data scale type

- Category structure

- Weighting needs
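
The number of raters changes the calculation itself. As a rough sketch of the multi-rater case, the function below computes Fleiss’ kappa in plain Python from a table of counts. It assumes every item was rated by the same number of raters; the function name and the example data are hypothetical.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a table where counts[i][j] is the number of
    raters who put item i into category j.
    Assumes every item received the same number of ratings (>= 2)."""
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("each item needs the same number of ratings (>= 2)")

    # Per-item agreement: share of rater pairs that agree on the item
    per_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_obs = sum(per_item) / n_items

    # Share of all ratings that fall into each category
    n_cats = len(counts[0])
    p_cat = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_cats)
    ]
    p_exp = sum(p * p for p in p_cat)
    if p_exp == 1.0:
        raise ValueError("kappa is undefined when all ratings share one category")
    return (p_obs - p_exp) / (1 - p_exp)

# Hypothetical data: 4 items, 3 raters, 2 categories
table = [[3, 0], [2, 1], [1, 2], [0, 3]]
print(round(fleiss_kappa(table), 3))  # 0.333
```

Established statistics packages offer tested implementations, so a hand-rolled version like this is mainly useful for checking your understanding of the calculation.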

Cohen’s Kappa Inter-Rater Reliability Explanation

Cohen’s kappa provides a chance-corrected agreement statistic for two raters who classify items into categories. Jacob Cohen introduced kappa in his 1960 paper on agreement measurement. The method gained wide adoption because it adjusts for agreement that random guessing would produce.

Cohen’s kappa compares:

- Observed agreement between raters

- Expected agreement by chance

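The statistic then scales that comparison into a coefficient that ranges from −1 to 1, where 1 means perfect agreement, 0 means agreement no better than chance, and negative values mean agreement worse than chance.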
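
Written as a formula, the comparison takes the standard form below. The symbols are conventional notation rather than notation taken from this guide: p_o is the observed proportion of agreement, p_e is the proportion expected by chance, and p_{A,k} and p_{B,k} are the shares of items that rater A and rater B assign to category k.

```latex
\kappa = \frac{p_o - p_e}{1 - p_e},
\qquad
p_e = \sum_{k} p_{A,k}\, p_{B,k}
```

As an illustrative calculation with made-up numbers, raters who agree on 85% of items when chance agreement is 50% obtain κ = (0.85 − 0.50) / (1 − 0.50) = 0.70.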

General interpretation guidelines often follow this pattern:

- 0.80 or above indicates very strong agreement

- 0.60 to 0.79 indicates substantial agreement

- 0.40 to 0.59 indicates moderate agreement

- Below 0.40 indicates weak agreement
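
These bands are easy to encode as a small helper for reporting scripts. The sketch below mirrors the guideline list above and treats each lower bound as inclusive, which is an assumption; the function name is hypothetical, and the cutoffs remain conventions rather than fixed rules.

```python
def interpret_kappa(kappa):
    """Map a kappa value to the descriptive bands listed in this guide.
    Lower bounds are treated as inclusive (an assumption)."""
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie between -1 and 1")
    if kappa >= 0.80:
        return "very strong"
    if kappa >= 0.60:
        return "substantial"
    if kappa >= 0.40:
        return "moderate"
    return "weak"

for value in (0.85, 0.65, 0.45, 0.10):
    print(value, interpret_kappa(value))
```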

Researchers use weighted kappa when categories follow an ordered scale and disagreements differ in severity.
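
A minimal sketch of weighted kappa in plain Python follows. It assumes the common disagreement-weight scheme in which the penalty grows with the distance between two ordered categories (linear when `power=1`, quadratic when `power=2`). The function name, parameters, and data are hypothetical.

```python
def weighted_kappa(a, b, categories, power=1):
    """Weighted kappa for two raters on an ordered scale.
    categories: labels ordered from lowest to highest.
    power=1 gives linear weights, power=2 quadratic weights."""
    k, n = len(categories), len(a)
    if k < 2:
        raise ValueError("need at least two ordered categories")
    index = {label: i for i, label in enumerate(categories)}

    # Observed proportions for every (rater A, rater B) category pair
    obs = [[0.0] * k for _ in range(k)]
    for x, y in zip(a, b):
        obs[index[x]][index[y]] += 1 / n
    row = [sum(obs[i]) for i in range(k)]                        # rater A marginals
    col = [sum(obs[i][j] for i in range(k)) for j in range(k)]   # rater B marginals

    observed_penalty = expected_penalty = 0.0
    for i in range(k):
        for j in range(k):
            weight = (abs(i - j) / (k - 1)) ** power  # 0 on the diagonal
            observed_penalty += weight * obs[i][j]
            expected_penalty += weight * row[i] * col[j]
    if expected_penalty == 0:
        raise ValueError("kappa is undefined when all ratings share one category")
    return 1 - observed_penalty / expected_penalty

# Hypothetical ratings on a three-point ordered scale
scale = ["low", "mid", "high"]
rater_a = ["low", "low", "mid", "mid", "high", "high"]
rater_b = ["low", "mid", "mid", "high", "high", "high"]
print(weighted_kappa(rater_a, rater_b, scale))  # linear weights
```

With these made-up ratings every disagreement is only one step apart, so the linear weighted value (0.625) comes out higher than the unweighted kappa for the same data (0.50).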

Any report of Cohen’s kappa should state the number of categories, the sample size, and the weighting approach, if one was used.

How to Calculate Kappa Inter-Rater Reliability

You can calculate kappa using a contingency table that cross-tabulates rater A and rater B decisions. Statistical software performs this calculation directly through cross-tabulation procedures with agreement statistics enabled.

The process requires:

- Two raters

- Matching category labels

- A rating for every item from each rater

The software computes observed agreement, chance agreement, and the final kappa coefficient. Analysts often run this step alongside other preprocessing checks in a broader SPSS data analysis workflow.
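
For readers who want to see what the software does behind the scenes, here is a short Python sketch that derives the same three quantities from a contingency table. The function name and the table values are hypothetical; rows represent rater A’s categories and columns represent rater B’s.

```python
def kappa_from_table(table):
    """Return (observed agreement, chance agreement, kappa) for a square
    table where table[i][j] counts items that rater A placed in
    category i and rater B placed in category j."""
    k = len(table)
    if any(len(row) != k for row in table):
        raise ValueError("table must be square: both raters share categories")
    n = sum(sum(row) for row in table)

    # Observed agreement: items on the diagonal
    p_obs = sum(table[i][i] for i in range(k)) / n

    # Chance agreement: product of the raters' marginal proportions
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_exp = sum(row_totals[i] * col_totals[i] for i in range(k)) / (n * n)
    if p_exp == 1:
        raise ValueError("kappa is undefined when all ratings share one category")
    return p_obs, p_exp, (p_obs - p_exp) / (1 - p_exp)

# Hypothetical 2x2 table: 50 items, categories "yes" and "no"
table = [[20, 5],
         [10, 15]]
p_obs, p_exp, kappa = kappa_from_table(table)
print(f"observed={p_obs:.2f} chance={p_exp:.2f} kappa={kappa:.2f}")
# observed=0.70 chance=0.50 kappa=0.40
```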

Reports should present the kappa value, its confidence interval when available, and the significance level.
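
When your software does not report an interval, one possible approach is a percentile bootstrap: resample the rated items with replacement, recompute kappa each time, and take the middle 95% of the results. The sketch below assumes that approach, which is a swapped-in technique rather than the method any particular package uses. The function names, defaults, and data are hypothetical.

```python
import random
from collections import Counter

def cohen_kappa(a, b):
    """Unweighted Cohen's kappa for two equal-length label lists.
    Returns None when chance agreement is 1 and kappa is undefined."""
    n = len(a)
    p_obs = sum(x == y for x, y in zip(a, b)) / n
    freq_a, freq_b = Counter(a), Counter(b)
    p_exp = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_exp == 1:
        return None
    return (p_obs - p_exp) / (1 - p_exp)

def bootstrap_kappa_ci(a, b, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for kappa, resampling items in pairs."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    n = len(a)
    stats = []
    for _ in range(n_boot):
        picks = [rng.randrange(n) for _ in range(n)]
        value = cohen_kappa([a[i] for i in picks], [b[i] for i in picks])
        if value is not None:  # skip resamples where kappa is undefined
            stats.append(value)
    if not stats:
        raise ValueError("kappa was undefined in every resample")
    stats.sort()
    tail = (1 - level) / 2
    lower = stats[int(tail * len(stats))]
    upper = stats[min(len(stats) - 1, int((1 - tail) * len(stats)))]
    return lower, upper

# Hypothetical data: 50 items rated "yes" or "no" by two raters
rater_a = ["yes"] * 25 + ["no"] * 25
rater_b = ["yes"] * 20 + ["no"] * 5 + ["yes"] * 10 + ["no"] * 15
point = cohen_kappa(rater_a, rater_b)
lower, upper = bootstrap_kappa_ci(rater_a, rater_b)
print(f"kappa={point:.2f}, 95% CI [{lower:.2f}, {upper:.2f}]")
```

Small samples produce wide intervals, which is one reason to report the interval alongside the point estimate.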

How to Improve Inter-Rater Reliability

Research teams can improve inter-rater reliability through structured design and training choices.

Effective improvement strategies include:

- Tight category definitions

- Clear scoring anchors

- Detailed coding manuals

- Practice scoring rounds

- Group calibration sessions

- Disagreement review meetings

Rater drift can occur over time, so long projects benefit from periodic recalibration. Teams should also remove ambiguous categories that create repeated disagreement.

Reliability improves when raters understand both the construct and the scoring rules at a detailed level.

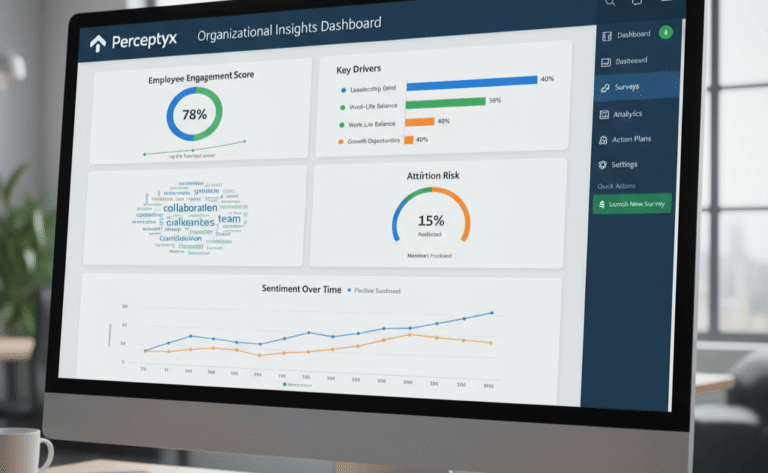

Why Inter-Rater Reliability Matters in Research

Inter-rater reliability protects measurement quality when human judgment influences scores. Strong agreement shows that results reflect the instrument and construct rather than individual rater preference. Weak agreement signals scoring ambiguity, poor training, or flawed category design.

High-quality studies treat rater agreement as a core measurement property, not an optional add-on. When raters score consistently, researchers can move forward with modeling, hypothesis testing, and interpretation with greater confidence.

Any project that converts observation or text into numbers should treat inter-rater reliability as a required validation step, not a cosmetic statistic.