Training programs are a major investment for any organization, but simply running a session is not enough. Leaders need evidence that employees are gaining the right skills, applying them on the job, and creating measurable improvements. This is where a training feedback survey becomes essential. Instead of relying on assumptions or informal comments, a structured survey captures expectations, learning outcomes, and application potential in a consistent way. A carefully designed instrument not only validates the effectiveness of your sessions but also highlights where adjustments are needed.

When you combine pre training surveys with post training feedback surveys, you build a before-and-after view that shows whether learning sticks. Add an analysis in SPSS, and you have hard data that you can present to stakeholders with confidence. Whether you are running classroom courses, virtual sessions, or self-paced online learning, a solid evaluation process helps you improve continuously. This article walks through the full process so your training delivers results that last.

What This Evaluation Tool Is and Why It Matters

A training feedback survey is a structured questionnaire used to evaluate learning activities. It gathers views on content, delivery, relevance, and application on the job. Unlike informal comments, it produces consistent metrics that you can compare across cohorts and time.

Good evaluations do more than score a class. They check whether the training solved a real problem. They also tell you how to fix weak spots before the next run. You can tailor instruments for workshops, compliance modules, or multi‑week academies. Paper still works in some settings, but digital forms speed things up. An online training feedback survey collects responses in minutes and feeds a dashboard without manual entry. A virtual training feedback survey focuses on the remote experience—audio quality, platform stability, breakout room usefulness, and chat engagement.

Effective instruments connect to goals. If the aim is safer behavior, ask about confidence using the correct procedure and intent to apply it. If the aim is faster software handling, ask about task time and error rates. Pair those with open text prompts so people can explain barriers. Surveys also build trust. When participants see their input drive changes, they invest more in learning.

The Kirkpatrick model remains a popular structure for evaluating reactions, learning, behavior, and results. See the overview from CIPD.

Designing Clear, Bias‑Free training feedback survey questions

Strong instruments start with strong wording. Bias creeps in when questions push a desired answer or when scales are unclear. Keep the reading level simple. Use one idea per sentence. Avoid double‑barreled prompts like “Was the trainer engaging and the content relevant?” Split that into two items.

Suggested training feedback survey questions:

- How relevant was this session to your role?

- How clear were the learning objectives?

- Rate the instructor’s pacing and clarity.

- How confident are you using the skill after this session?

- Which topics should be expanded or reduced?

- What will you apply in the next two weeks?

- What barriers could prevent you from applying what you learned?

Use a balanced Likert scale (e.g., 1–5 from “Strongly disagree” to “Strongly agree”). Make “Not applicable” available to avoid forced guesses. Randomize item order if you suspect priming. Add two open questions: one for wins, one for ideas. For privacy, state how responses will be used and who will see them.

Map every item to an objective. If you cannot link it, cut it. Keep the instrument short enough to respect people’s time but long enough to inform action. Ten to fifteen items plus two open-ended questions is a sweet spot for most teams. Pilot the survey with five people from the target audience. Note any confusion and fix it before launch.

Pre training survey

A pre training survey sets the baseline. It captures knowledge, confidence, and expectations before instruction begins. This step stops you from guessing. It also lets you tailor the session to the room.

Focus the pre instrument on three areas:

- Starting point. Ask brief knowledge checks or confidence ratings on key tasks.

- Context. Capture role, tenure, and where people will apply the skill.

- Needs. Ask what skills they most want from the session and why.

Keep it short. Five to eight items is enough. Use the same scales you will use later so you can compare results. Tell participants that their answers guide content and will not affect performance reviews.

Pre data helps the facilitator adjust depth and examples. If most attendees are advanced, you can spend less time on basics. If confidence is low across the board, you can add practice time. The pre also reveals access issues for virtual classes: headset quality, bandwidth, or platform restrictions. Fixing those before day one raises completion and satisfaction. Finally, bank the pre scores. You will need them for the analysis steps later.

Post training feedback survey

A post training feedback survey measures reaction and early learning right after the session. Use the same core items from the pre so you can compare. Add targeted questions on delivery quality, practice time, and perceived usefulness.

Suggested areas:

- Confidence doing the target tasks now.

- Intention to apply the skill within a set time.

- Perceived relevance of scenarios and exercises.

- Trainer effectiveness and pace.

- Tech quality for virtual or hybrid delivery.

- Suggestions for the next run.

Time matters. Send the post survey within 24 hours, while details are fresh. Keep it mobile friendly so people can answer on the go. If you run a multi‑day course, send a short pulse at the end of each day and a full post after the last session. For behavior change, plan a follow‑up survey 30–60 days later. Ask whether people actually applied the skill, what helped, and what blocked progress. Pair that with manager inputs if possible.

Close the loop. Share a summary with participants. List two or three changes you will make from their input. This feedback loop lifts response rates over time and builds a culture that values learning evidence.

Pre and post training survey & how to analyze pre and post survey data

Align Measures for Clean Comparison

To compare change, the pre and post training survey must use identical scales and clear labels. Keep item wording the same across timepoints. Use unique IDs so each person’s pre and post can be paired.
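As a minimal sketch of that pairing step, the Python below matches pre and post rows on a participant ID and lists anyone who answered at only one timepoint. The field names `id` and `conf`, and the row-as-dictionary layout, are illustrative assumptions rather than any particular form tool’s export format.

```python
from collections import Counter


def index_by_id(rows, key="id"):
    """Map each participant ID to its row, refusing duplicate IDs."""
    counts = Counter(row[key] for row in rows)
    dupes = sorted(pid for pid, n in counts.items() if n > 1)
    if dupes:
        raise ValueError(f"duplicate IDs: {dupes}")
    return {row[key]: row for row in rows}


def pair_by_id(pre_rows, post_rows, key="id"):
    """Return (paired, unmatched): paired maps ID -> (pre_row, post_row)."""
    pre = index_by_id(pre_rows, key)
    post = index_by_id(post_rows, key)
    paired = {pid: (pre[pid], post[pid]) for pid in sorted(pre.keys() & post.keys())}
    # IDs present at only one timepoint cannot be compared, so report them separately.
    unmatched = sorted(pre.keys() ^ post.keys())
    return paired, unmatched


pre_rows = [{"id": "A1", "conf": 2}, {"id": "A2", "conf": 3}, {"id": "A3", "conf": 4}]
post_rows = [{"id": "A1", "conf": 4}, {"id": "A3", "conf": 4}, {"id": "B9", "conf": 5}]
paired, unmatched = pair_by_id(pre_rows, post_rows)
print(sorted(paired), unmatched)  # ['A1', 'A3'] ['A2', 'B9']
```

Raising an error on duplicate IDs, instead of silently keeping one row, forces the data cleaning decision to be made on purpose.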

Choose the Right Metrics

Track mean scores for each item. Track a composite score for the core objective (for example, the average of four confidence items). Add a pass/fail threshold if the program must certify learners.
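A rough Python sketch of those three metrics follows. The item names `conf1` to `conf4` and the 4.0 pass threshold are hypothetical placeholders; substitute your own items and cut-off.

```python
from statistics import mean

CONFIDENCE_ITEMS = ["conf1", "conf2", "conf3", "conf4"]  # hypothetical item names


def item_means(rows, items=CONFIDENCE_ITEMS):
    """Mean score for each item across all respondents."""
    return {item: mean(row[item] for row in rows) for item in items}


def composite(row, items=CONFIDENCE_ITEMS):
    """One respondent's composite: the average of their confidence items."""
    return mean(row[item] for item in items)


def share_passing(rows, threshold=4.0, items=CONFIDENCE_ITEMS):
    """Fraction of respondents whose composite meets an assumed pass threshold."""
    return sum(composite(row, items) >= threshold for row in rows) / len(rows)


rows = [
    {"conf1": 4, "conf2": 5, "conf3": 3, "conf4": 4},
    {"conf1": 3, "conf2": 3, "conf3": 2, "conf4": 4},
]
print(item_means(rows)["conf1"], composite(rows[0]), share_passing(rows))
```

The share passing is worth reporting next to the average, because a higher mean can hide a group that still falls short of the threshold.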

Run the Basic Analysis

Here’s how to analyze pre and post survey data without overcomplicating it:

- Pair each respondent’s pre and post scores.

- Calculate differences (post minus pre).

- Examine average change and its distribution.

- Use a statistical test to check if the change is significant.
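Those steps can be sketched in plain Python (standard library only) as below. The sketch computes the paired t statistic by hand so the arithmetic is visible; the p‑value itself would come from SPSS or from a library such as SciPy’s `scipy.stats.ttest_rel`. The scores are made-up illustration data.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """Paired t statistic for post minus pre, with its degrees of freedom."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two complete pre/post pairs")
    diffs = [b - a for a, b in zip(pre, post)]  # post minus pre, per person
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)  # sample SD of the differences (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_diff / (sd_diff / sqrt(n))
    return {"n": n, "mean_diff": mean_diff, "sd_diff": sd_diff, "t": t, "df": n - 1}


pre = [2, 3, 3, 2, 4]
post = [4, 4, 3, 3, 5]
result = paired_t(pre, post)
print(result["mean_diff"], round(result["t"], 3), result["df"])  # 1 3.162 4
```

With SciPy installed, `scipy.stats.ttest_rel(post, pre)` should report the same t along with a two-sided p‑value.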

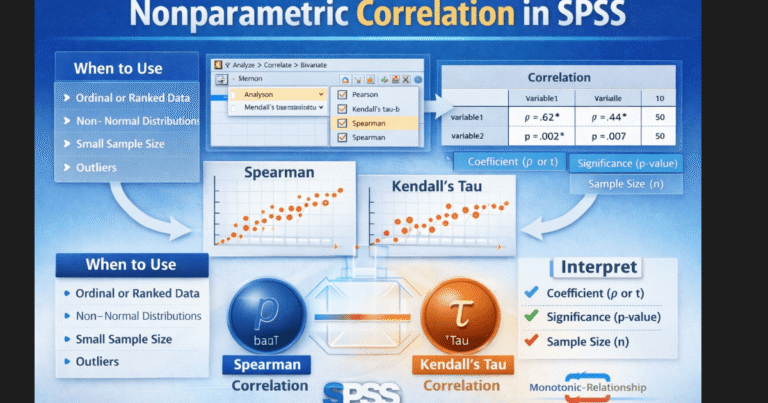

The most common test is the paired t‑test, which checks whether the average of the per-person differences (post minus pre) is different from zero. It assumes those differences are roughly normally distributed. If the differences are strongly non‑normal or contain outliers, use the Wilcoxon signed‑rank test instead. Visualize the change with a simple before/after bar chart or a slope plot per person. Charts make the story obvious for stakeholders.
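For readers curious about what the Wilcoxon signed‑rank test actually ranks, here is a minimal standard-library sketch of its statistic. It drops zero differences and gives tied absolute differences their average rank, which is one common convention; software packages differ in how they treat zeros and ties, so rely on SPSS (or `scipy.stats.wilcoxon`) for the p‑value.

```python
def signed_rank_sums(pre, post):
    """Return (W_plus, W_minus, n_used) for post minus pre, dropping zero differences."""
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    ordered = sorted(diffs, key=abs)  # rank by size of change, ignoring direction
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        while j + 1 < len(ordered) and abs(ordered[j + 1]) == abs(ordered[i]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks start at 1; ties share their average rank
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, ordered) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, ordered) if d < 0)
    return w_plus, w_minus, len(ordered)


pre = [3, 2, 4, 3, 5, 2]
post = [4, 4, 3, 3, 5, 5]
print(signed_rank_sums(pre, post))  # (8.5, 1.5, 4)
```

A large W_plus relative to W_minus means most of the sizeable changes were gains.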

Document Assumptions

Note attendance, time between measures, and any changes to content. These details matter when leaders interpret gains. Keep your script and instruments on file so future cohorts can be compared on the same footing.

A concise overview of learning evaluation and impact can be found at CIPD.

How to analyze pre and post training survey in SPSS

Prepare the File

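Export data from your form tool as CSV or XLSX. If pre and post responses arrive as separate files, merge them on the participant ID (Data → Merge Files → Add Variables) so each row holds one person’s pre and post answers. In SPSS, set variable types and value labels. Create clear names like conf_pre and conf_post. Check for duplicate IDs and remove unmatched records.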

Screen the Data

Inspect missing values. If an item is missing on either timepoint, decide on a rule: exclude pairwise for that test or impute if justified. Check distributions with histograms. Note any extreme outliers and confirm they are real, not entry errors.
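One way to make that rule explicit is sketched below in Python. It assumes each record is a hypothetical `(id, pre, post)` tuple on a 1–5 scale, with `None` standing in for a missing or "Not applicable" answer. Pairs with a gap are excluded, and impossible values are flagged for a manual check rather than silently deleted.

```python
def screen_pairs(records, low=1, high=5):
    """Split (id, pre, post) records into kept, dropped (missing) and flagged (out of range)."""
    kept, dropped, flagged = [], [], []
    for pid, pre, post in records:
        if pre is None or post is None:
            dropped.append(pid)  # cannot be paired for this test
        elif not (low <= pre <= high and low <= post <= high):
            flagged.append(pid)  # impossible on the scale: likely an entry error to confirm
        else:
            kept.append((pid, pre, post))
    return kept, dropped, flagged


records = [("A1", 2, 4), ("A2", None, 3), ("A3", 3, 33), ("A4", 4, 5)]
kept, dropped, flagged = screen_pairs(records)
print([r[0] for r in kept], dropped, flagged)  # ['A1', 'A4'] ['A2'] ['A3']
```

This only catches values that cannot exist on the scale; real but extreme scores still need the histogram check described above.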

Build Scales

If you have multiple items for a construct (for example, four confidence items), compute a mean score at pre and at post. Use Reliability Analysis (Analyze → Scale → Reliability Analysis, which reports Cronbach’s alpha) to check internal consistency. Document any items you drop and why.
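SPSS computes alpha for you, but the formula is short enough to check by hand: alpha = k / (k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. A minimal Python sketch, assuming each row holds one respondent’s scores on the same items in the same order (the data are made up):

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    items = list(zip(*rows))  # transpose: one tuple of scores per item
    item_var_sum = sum(variance(scores) for scores in items)
    total_var = variance([sum(row) for row in rows])  # variance of each person's total
    return k / (k - 1) * (1 - item_var_sum / total_var)


rows = [[2, 3], [4, 3], [3, 5], [5, 4]]
print(round(cronbach_alpha(rows), 3))
```

A low value like this one suggests the items do not move together. An often-cited rule of thumb treats 0.7 or higher as acceptable, though the right bar depends on how the scale will be used.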

Run Descriptives

Produce pre and post means, standard deviations, and counts. Add simple plots. These steps help you catch issues before you run inferential tests.

This streamlined workflow keeps your analysis reproducible. If you want expert support, our team provides help with SPSS analysis, including data cleaning, scale building, and clear reporting. Many teams also prefer to pay someone to do SPSS analysis during peak project periods so internal staff can focus on delivery.

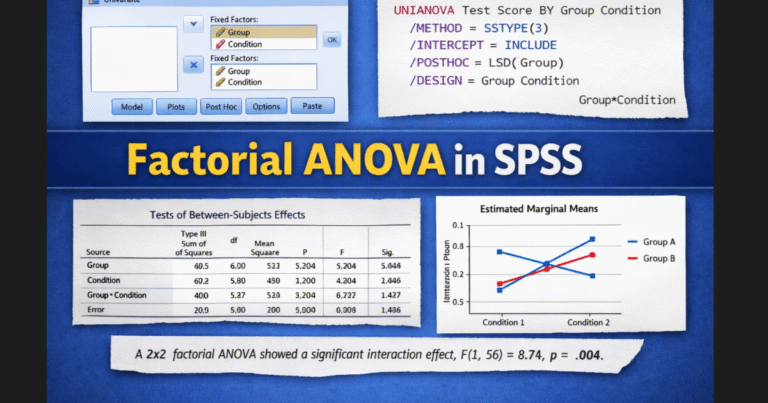

How to run a paired t test in spss for pre and post training survey

Run the Test

- Open Analyze → Compare Means → Paired‑Samples T Test.

- Move your pre variable to Variable1 and post variable to Variable2.

- Click OK to run the test.

- Repeat for each key item or for your composite scores.

Read the Output

SPSS produces three key tables (version 27 and later can add a fourth, Paired Samples Effect Sizes):

- Paired Samples Statistics: shows means and standard deviations for pre and post.

- Paired Samples Correlations: shows the correlation between timepoints (useful context).

- Paired Samples Test: shows the mean difference, its standard deviation and 95% confidence interval, the t statistic, degrees of freedom, and Sig. (2‑tailed), which is your p‑value (recent versions label it Two‑Sided p).

Quality Checks

Confirm that each pair belongs to the same person. SPSS computes each difference as Variable1 minus Variable2, so with pre in Variable1 a negative mean difference means post > pre (scores went up), and a positive one means post < pre. Check for reverse‑scored items; if you used any, reverse them before testing. If assumptions look shaky, compare results with a Wilcoxon signed‑rank test (Analyze → Nonparametric Tests → Related Samples).
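Two of those checks are easy to get backwards, so here is a small Python sketch. `reverse_score` flips a negatively worded item on a scale from `low` to `high` (1–5 assumed here), and `spss_style_mean_difference` mirrors SPSS’s convention of reporting the mean of Variable1 minus Variable2. Both function names are illustrative and not part of SPSS.

```python
def reverse_score(x, low=1, high=5):
    """Reverse a negatively worded item: on a 1-5 scale, 1 becomes 5 and 4 becomes 2."""
    return low + high - x


def spss_style_mean_difference(var1, var2):
    """Mean of (Variable1 - Variable2), the order used in the Paired Samples Test table."""
    return sum(a - b for a, b in zip(var1, var2)) / len(var1)


conf_pre = [2, 3, 3]
conf_post = [4, 4, 3]
# With pre as Variable1, a gain shows up as a negative mean difference.
print(spss_style_mean_difference(conf_pre, conf_post))
print([reverse_score(x) for x in [1, 4, 5]])
```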

For full details, see the paired‑samples t‑test documentation for SPSS Statistics.

If you’d rather have an analyst run this for you, we can handle setup, testing, and reporting within two business days: SPSS Help & Analysis.

How to interpret a paired t test in spss

Interpret the Numbers

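Start with the mean difference. SPSS reports Variable1 minus Variable2, so with pre entered as Variable1 a negative value means scores increased from pre to post; to report gains as positive numbers, enter post as Variable1 or flip the sign. Check the p‑value. If p < .05, the change is statistically significant. Report the confidence interval for the mean difference so leaders see the likely range of improvement.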
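The confidence interval SPSS prints is the mean difference plus or minus a critical t value times the standard error of the differences. A minimal Python sketch, where the critical value is passed in rather than computed (2.776 is the two‑tailed 95% value for df = 4; use the value for your own df):

```python
from math import sqrt
from statistics import mean, stdev


def paired_ci(pre, post, t_crit):
    """Confidence interval for the mean of post minus pre, given a critical t value."""
    diffs = [b - a for a, b in zip(pre, post)]
    se = stdev(diffs) / sqrt(len(diffs))  # standard error of the mean difference
    m = mean(diffs)
    return m - t_crit * se, m + t_crit * se


low, high = paired_ci([2, 3, 3, 2, 4], [4, 4, 3, 3, 5], t_crit=2.776)
print(round(low, 2), round(high, 2))
```

Because this sketch uses post minus pre, gains appear as positive numbers; SPSS’s interval has the opposite sign when pre is entered as Variable1.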

Add Effect Size

Significance alone does not tell the full story. Compute Cohen’s d for paired samples (mean difference divided by the standard deviation of the differences). As a rough guide, 0.2 is small, 0.5 is medium, and 0.8 is large. SPSS 27 and later can report d through the Estimate effect sizes option in the Paired‑Samples T Test dialog; in older versions, calculate it with a quick Compute step or in syntax.
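Because paired d is just the mean difference over the standard deviation of the differences, it takes a few lines of Python. The labels follow the rough 0.2 / 0.5 / 0.8 guide above; the "below small" label for smaller values is this sketch’s own wording.

```python
from statistics import mean, stdev


def cohens_dz(pre, post):
    """Cohen's d for paired samples: mean(post - pre) / SD(post - pre)."""
    diffs = [b - a for a, b in zip(pre, post)]
    return mean(diffs) / stdev(diffs)


def size_label(d):
    """Rough label using the 0.2 / 0.5 / 0.8 guide; the sign is ignored."""
    d = abs(d)
    if d >= 0.8:
        return "large"
    if d >= 0.5:
        return "medium"
    if d >= 0.2:
        return "small"
    return "below small"


d = cohens_dz([2, 3, 3, 2, 4], [4, 4, 3, 3, 5])
print(round(d, 2), size_label(d))  # 1.41 large
```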

Connect to Business Outcomes

Translate stats into action. Example: “Confidence using the new CRM rose by 1.1 points on a 5‑point scale (p < .001, d = .72).” Tie the result to adoption targets, error reduction, or safety goals. If the average moved but not enough people reached proficiency, plan coaching or a booster module.

Tell a Clear Story

Graphs help. Use side‑by‑side bars for pre and post with error bars, or a simple slope chart to show each learner’s change. Keep the deck short: one slide for goals, one for methods, one for results, and one for next steps. If you need a reviewer, our analysts can polish the narrative and visuals through SPSS Help & Analysis.

Interpreting t‑tests and effect sizes: university stats guides (e.g., UCLA IDRE) provide friendly walk‑throughs.

Digital, Virtual, and Hybrid Delivery Considerations

Remote learning changed evaluation. The tool is the same, but the context adds new friction points. For a virtual training feedback survey, include items about platform stability, audio, chat moderation, and the value of breakout rooms. Ask whether multitasking reduced focus and what would make remote sessions feel more interactive.

For an online training feedback survey that follows self‑paced content, focus on navigation, clarity of micro‑videos, and the usefulness of knowledge checks. Ask whether the LMS saved progress correctly and whether search worked. Include an item on accessibility: captions, transcript quality, or keyboard navigation.

Timing matters. For live sessions, share the link at the end while energy is high. For self‑paced courses, trigger the survey at completion and again after two weeks to see what stuck. Keep mobile users in mind. Use short pages, progress bars, and save‑and‑resume options. If your audience spans time zones, set a long enough window so everyone can respond without pressure.

Finally, collect optional contact details for follow‑up interviews. A quick call with five learners can explain patterns you see in the numbers and point to fixes that raise engagement next time.

Employee training feedback survey

Employee programs link directly to performance and retention. An employee training feedback survey should reflect that reality. Ask about relevance to role, support from managers, and how training aligns with current goals. Include an item on psychological safety: did people feel safe asking questions and making mistakes during practice?

Consider adding behavior‑focused prompts:

- What task will you do differently this week?

- What action will your manager see by Friday?

- What tool or resource do you still need?

Segment results by role or location to spot pockets that need extra support. Share a tidy summary with managers. Provide them with two coaching questions they can ask in one‑on‑ones to reinforce application. Track downstream KPIs where possible—fewer safety incidents, faster ticket handling, higher customer satisfaction. This link between survey scores and outcomes turns training into a business asset.
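Segmenting by role or location comes down to grouping each person’s change score. A minimal Python sketch, assuming hypothetical records with `role`, `pre`, and `post` fields; it returns the group size alongside the mean so very small groups are not over-read.

```python
from collections import defaultdict
from statistics import mean


def mean_change_by(records, group_key):
    """Map each group to (number of people, mean of post minus pre)."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[group_key]].append(rec["post"] - rec["pre"])
    return {group: (len(changes), mean(changes)) for group, changes in sorted(groups.items())}


records = [
    {"role": "agent", "pre": 2, "post": 4},
    {"role": "agent", "pre": 3, "post": 4},
    {"role": "lead", "pre": 4, "post": 4},
]
print(mean_change_by(records, "role"))
```

Swapping `"role"` for a location field gives the location view without changing the function.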

When bandwidth is tight, our team can run the full cycle—instrument design, distribution, and analysis—so HR and L&D can focus on enablement. Explore options or request a quote via SPSS Help & Analysis.

Reporting Results People Will Read

Great data can still be ignored if the report is dense. Keep it simple and actionable. Start with a one‑page executive summary. State the goal, sample size, and top three findings. Follow with a slide that shows change from pre to post for the main objective. Add one slide with strengths and one with fixes.

Use plain language: “Participants feel more confident using the incident form. Confidence rose from 2.8 to 3.9 out of 5.” Add a short list of recommended actions with owners and dates. Build a dashboard in your BI tool or Looker Studio (formerly Google Data Studio) for ongoing cohorts. Automate data pulls from your form platform to cut manual work.

If your leaders want benchmarks, collect a rolling average across the last four cohorts and plot it. Show whether this group is above, on, or below trend. That simple context turns numbers into a story. For complex cases, we can create a tailored scorecard and automate updates as part of our SPSS analysis service.
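The rolling benchmark can be sketched in Python as follows. The four-cohort window comes from the text; the 0.1-point tolerance for calling a cohort "on trend" is an arbitrary assumption to set for your own scale, and the scores are made up.

```python
def trend_position(history, current, window=4, tolerance=0.1):
    """Compare a cohort's score with the average of the last `window` cohorts."""
    recent = history[-window:]
    if not recent:
        raise ValueError("need at least one earlier cohort")
    benchmark = sum(recent) / len(recent)
    if current > benchmark + tolerance:
        status = "above"
    elif current < benchmark - tolerance:
        status = "below"
    else:
        status = "on"
    return benchmark, status


history = [3.6, 3.8, 3.7, 3.9, 4.0]  # made-up mean scores from earlier cohorts
benchmark, status = trend_position(history, current=4.1)
print(round(benchmark, 2), status)  # 3.85 above
```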


Common Pitfalls and How to Avoid Them

Several traps reduce the value of evaluations. The first is overlong surveys. Keep yours tight and focused on decisions you will make. The second is mismatched pre and post items. If scales or wording change, you cannot compare. The third is low response rates. Fix this by asking at the right moment, keeping it mobile friendly, and sharing back what changed.

Another trap is treating the survey as the only truth. Pair it with behavior metrics or manager observations when possible. Also avoid vanity metrics. High satisfaction is nice, but skill gain and application matter more. Finally, do not bury the analysis. Stakeholders need the bottom line. Lead with the change in the main outcome and what you will do next.

Teams also struggle with statistics. If SPSS feels heavy, start with descriptives and a simple paired t‑test. Document steps so others can reproduce your work. When projects stack up, it’s fine to pay someone to do SPSS analysis. Outsourcing the number‑crunching lets your experts focus on design, coaching, and rollout.

Conclusion

Training is only as valuable as the outcomes it produces. Without structured evaluation, it is impossible to know if learners gained confidence, changed behavior, or improved performance. That is why a training feedback survey is more than a formality—it is a decision-making tool. By setting a baseline with a pre training survey and comparing it with a post training feedback survey, you gain evidence of real impact. When analyzed with SPSS through a paired t-test, these results move from opinions to proof, and proof is what convinces decision-makers to continue or expand programs.

Equally important, the process empowers employees. When participants see their input lead to improvements, they feel valued and more engaged in learning. Digital and virtual surveys make it easy to collect feedback at scale, while advanced statistical techniques ensure your reporting is credible. If building and analyzing surveys feels complex, you can always lean on experts. At myspsshelp.com, we simplify SPSS analysis, data interpretation, and reporting so that your training story is clear, persuasive, and actionable.