Linear regression analysis is a statistical technique for examining the relationship between variables. In its simplest form, simple linear regression, it involves two continuous variables and estimates how much one variable (the dependent variable) changes, on average, when the other (the independent variable) changes by one unit. For example, we can use linear regression to understand whether exam performance can be predicted based on revision time, whether cigarette consumption can be predicted based on smoking duration, and so forth.

In this article, we will show you how to perform a simple linear regression analysis using SPSS, a popular software for data analysis. We will also explain how to interpret and report the results from this test. By the end of this post, you will be able to:

- Create a scatterplot to check the linearity and direction of the relationship between two variables

- Run a simple linear regression analysis using SPSS and obtain the output

- Evaluate the assumptions of linear regression and check for potential problems

- Interpret the coefficients, the R-squared value, and the significance test of the regression model

- Report the results of the linear regression analysis in APA style

Let’s get started!

Step 1: Define the research question and the variables

The first step of any data analysis is to define the research question and the variables that are involved. For this example, we will use a hypothetical dataset that contains the IQ scores and the job performance scores of 10 employees in a company. The main thing we want to figure out is: does IQ predict job performance? And if so, how?

The variable we want to predict is called the dependent variable (or sometimes, the outcome variable). In this case, it is the job performance score, which is measured on a scale from 0 to 150. The variable we are using to predict the dependent variable’s value is called the independent variable (or sometimes, the predictor variable). In this case, it is the IQ score, which is measured on a scale from 70 to 130.

Step 2: Create a scatterplot to check the linearity and direction of the relationship between two variables

A great starting point for our analysis is a scatterplot. It shows whether the IQ and performance scores look plausible and whether there is any relationship between them worth modeling. To create one, go to Graphs > Legacy Dialogs > Scatter/Dot and follow the steps below:

- Select Simple Scatter as the type of scatterplot and click Define.

- Move the variable performance to the Y Axis box and the variable IQ to the X Axis box.

- Click Titles and enter a title and a subtitle for the scatterplot. For example, “Scatterplot of Performance with IQ” and “All Employees | N = 10”.

- Click OK.

The scatterplot shows that there seems to be a moderate positive correlation between IQ and performance: on average, employees with higher IQ scores tend to perform better. This relation looks roughly linear, which means that we can use a straight line to describe it. We can also add a regression line to our scatterplot to see how well it fits the data. To do this, we can right-click on the scatterplot and select Edit Content > In Separate Window. This will open a Chart Editor window, where we can click on the Add Fit Line at Total icon. By default, SPSS will add a linear regression line to our scatterplot.

The equation of the line is y = 34.26 + 0.64x, where y is the predicted performance score and x is the IQ score. This means that for every one-point increase in IQ, we expect the performance score to increase by 0.64 points. The R-squared value is 0.403, which indicates that IQ accounts for 40.3% of the variation in performance scores. That is, IQ predicts performance fairly well in this sample. However, a lot of information (such as statistical significance and confidence intervals) is still missing.
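To make the fitted line concrete, here is a minimal Python sketch that plugs a few IQ values into the equation reported above. The function name `predict_performance` and the example IQ values are our own choices for illustration; only the intercept, slope, and R-squared come from the output.

```python
import math

# Coefficients reported for the fit line in this article.
INTERCEPT = 34.26
SLOPE = 0.64
R_SQUARED = 0.403


def predict_performance(iq: float) -> float:
    """Predicted performance score for a given IQ under the fitted line."""
    return INTERCEPT + SLOPE * iq


# Correlation implied by R-squared (positive because the slope is positive).
r = math.sqrt(R_SQUARED)

for iq in (90, 100, 110):  # illustrative IQ values
    print(f"IQ {iq}: predicted performance = {predict_performance(iq):.2f}")
print(f"r = {r:.3f}")
```

Predictions are only trustworthy inside the range of IQ scores observed in the sample; the line says nothing reliable about values far outside it.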

Step 3: Run a simple linear regression analysis using SPSS and obtain the output

To run a simple linear regression analysis using SPSS, we can use the Analyze > Regression > Linear menu. The steps are as follows:

- Move the variable performance to the Dependent box and the variable IQ to the Independent(s) box.

- Click Statistics and make sure that Estimates and Model fit are checked. You can also check Confidence intervals if you want to see the 95% confidence intervals for the regression coefficients (SPSS does not report a confidence interval for R-squared here). Click Continue.

- Click OK.

The output will appear in the Output Viewer window. It will consist of four tables: Variables Entered/Removed, Model Summary, ANOVA, and Coefficients. We will interpret these tables in Step 5, after checking the assumptions in Step 4.
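If you are curious what SPSS computes behind the dialog, the following sketch fits a one-predictor least-squares line in plain Python. It is not SPSS's implementation, and the data are made-up numbers for illustration only, not the dataset used in this article; `fit_simple_regression` is a hypothetical helper name.

```python
from statistics import mean


def fit_simple_regression(x, y):
    """Ordinary least squares with one predictor: intercept, slope, R-squared."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx                    # B for the predictor
    intercept = y_bar - slope * x_bar    # B for the constant
    r_squared = sxy ** 2 / (sxx * syy)   # share of variance explained
    return intercept, slope, r_squared


# Made-up illustrative data, NOT the article's dataset.
iq = [85, 90, 95, 100, 105, 110, 115, 120, 125, 130]
performance = [80, 95, 88, 104, 92, 110, 99, 118, 105, 121]

intercept, slope, r_squared = fit_simple_regression(iq, performance)
print(f"performance = {intercept:.2f} + {slope:.2f} * IQ, R-squared = {r_squared:.3f}")
```

The slope and intercept correspond to the B column of the Coefficients table, and R-squared to the Model Summary table.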

Step 4: Evaluate the assumptions of linear regression and check for potential problems

Before we interpret the results of the linear regression analysis, we need to check if the data meet the assumptions of linear regression. These are:

Assumption 1

The dependent variable should be measured at the continuous level (i.e., it is either an interval or ratio variable). Examples of continuous variables include revision time (measured in hours), intelligence (measured using IQ score), exam performance (measured from 0 to 100), weight (measured in kg), and so forth. You can learn more about interval and ratio variables in our article: [Types of Variable].

Assumption 2

The independent variable should also be measured at the continuous level (i.e., it is either an interval or ratio variable).

Assumption 3

There should be a linear relationship between the dependent and independent variables. This can be checked by inspecting the scatterplot, as we did in step 2. If the relationship is not linear, you may need to transform your data or use a different type of regression analysis.

Assumption 4

There should be no significant outliers, high leverage points, or highly influential points. Outliers are cases whose observed value of the dependent variable lies far from the value the model predicts. Leverage points are data points that have extreme values for the independent variable. Influential points are data points that have a large impact on the regression equation. These points can distort the results of the regression analysis and reduce the accuracy of the predictions. You can check for outliers, leverage points, and influential points by examining the standardized residuals, the leverage values, and the Cook’s distance values, respectively. These values can be saved to the dataset by clicking Save in the Linear Regression dialog and checking Standardized under Residuals, and Cook’s and Leverage values under Distances. Note that SPSS saves centered leverage values, which are smaller by 1/n than the leverage values defined in many textbooks.

You can then use descriptive statistics and boxplots to identify any problematic points. You can also use the casewise diagnostics table, which lists the cases whose standardized residuals fall beyond a chosen cutoff. SPSS uses 3 standard deviations by default; you can lower it, for example to 2.5, so that cases deviating more than 2.5 standard deviations from their predicted value are listed. You can access the casewise diagnostics table by clicking Statistics in the Linear Regression dialog and checking Casewise diagnostics. Investigate any flagged case before acting on it: correct data entry errors, and only then consider removing the case or using a robust method of regression that can handle it.
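The three diagnostics can be sketched in a few lines of Python for the one-predictor case. This is an illustration under stated assumptions, not SPSS's code: the data are made up (the last case is deliberately placed far below the trend), `regression_diagnostics` is a hypothetical helper, and the cutoffs of 3 for standardized residuals and 1 for Cook's distance are common rules of thumb rather than fixed standards.

```python
from math import sqrt
from statistics import mean


def regression_diagnostics(x, y):
    """Per-case standardized residual, leverage, and Cook's distance (one predictor)."""
    n = len(x)
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    mse = sum(e ** 2 for e in residuals) / (n - 2)  # two estimated parameters
    cases = []
    for xi, e in zip(x, residuals):
        leverage = 1 / n + (xi - x_bar) ** 2 / sxx  # diagonal of the hat matrix
        cases.append({
            "zresid": e / sqrt(mse),                # residual in standard-error units
            "leverage": leverage,
            "centered_leverage": leverage - 1 / n,  # the form SPSS saves
            "cooks_d": (e ** 2 / (2 * mse)) * leverage / (1 - leverage) ** 2,
        })
    return cases


# Made-up data; the last case is deliberately far below the trend.
iq = [85, 90, 95, 100, 105, 110, 115, 120, 125, 130]
performance = [80, 95, 88, 104, 92, 110, 99, 118, 105, 60]

diagnostics = regression_diagnostics(iq, performance)
for case_number, case in enumerate(diagnostics, start=1):
    flag = "  <- inspect" if abs(case["zresid"]) > 3 or case["cooks_d"] > 1 else ""
    print(f"case {case_number:2d}: z = {case['zresid']:6.2f}, "
          f"leverage = {case['leverage']:.3f}, Cook's D = {case['cooks_d']:.3f}{flag}")
```

In this made-up sample the last case combines an extreme IQ value with a large residual, which is exactly the combination that makes a point influential.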

Assumption 5

There should be no multicollinearity, which occurs when the independent variables are too highly correlated with each other. Multicollinearity inflates the standard errors of the coefficients and makes their estimates unstable. Since we only have one independent variable in this example, we don’t need to worry about this assumption. However, if you have two or more independent variables, you can check for multicollinearity by examining the correlation matrix, the tolerance values, and the variance inflation factor (VIF) values. These values can be obtained by clicking Statistics in the Linear Regression dialog and checking Descriptives (which adds the correlation matrix) and Collinearity diagnostics (which adds tolerance and VIF to the Coefficients table). You may need to remove or combine some of the independent variables, or use a different type of regression analysis, if multicollinearity is present.
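For intuition about tolerance and VIF, consider the simplest case of exactly two predictors, where both quantities follow directly from the correlation between them. The sketch below assumes that two-predictor setting; the correlation of 0.9 is a made-up value, and `tolerance_and_vif` is a hypothetical helper.

```python
def tolerance_and_vif(r_between_predictors: float):
    """Tolerance and VIF for a model with exactly two predictors.

    With two predictors, regressing one on the other gives R-squared = r ** 2,
    so tolerance = 1 - r ** 2 and VIF = 1 / tolerance.
    """
    tolerance = 1 - r_between_predictors ** 2
    return tolerance, 1 / tolerance


for r in (0.0, 0.5, 0.9):  # illustrative correlations between the two predictors
    tolerance, vif = tolerance_and_vif(r)
    print(f"r = {r:.1f}: tolerance = {tolerance:.2f}, VIF = {vif:.2f}")
```

With more than two predictors, the same definitions apply, but each predictor's R-squared comes from regressing it on all the other predictors, which is what SPSS does for you.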

Assumption 6

The residuals (the differences between the observed and predicted values) should be approximately normally distributed. This can be checked by inspecting the histogram and the normal probability plot (P-P plot) of the standardized residuals. These plots can be obtained by clicking Plots in the Linear Regression dialog and checking Histogram and Normal probability plot. You can also use the Kolmogorov-Smirnov test or the Shapiro-Wilk test to test the normality of the residuals. The Linear Regression dialog does not run these tests itself: first save the residuals (click Save and check Standardized under Residuals), then go to Analyze > Descriptive Statistics > Explore, move the saved residual variable to the Dependent List, click Plots, and check Normality plots with tests. If the residuals are not normally distributed, you may need to transform your data or use a different type of regression analysis.
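To see what a P-P plot actually compares, the sketch below computes its coordinates by hand: the observed cumulative proportion of each sorted residual against the proportion expected under a normal distribution. The residuals are made-up values, `pp_plot_points` is a hypothetical helper, and the plotting position (i - 0.5) / n is an assumption on our part; SPSS may use a slightly different formula, so its plot can differ a little.

```python
from statistics import NormalDist, mean, pstdev


def pp_plot_points(residuals):
    """(observed, expected) cumulative probabilities for a normal P-P plot."""
    n = len(residuals)
    normal = NormalDist(mean(residuals), pstdev(residuals))
    points = []
    for i, e in enumerate(sorted(residuals), start=1):
        observed = (i - 0.5) / n   # assumed plotting position
        expected = normal.cdf(e)   # proportion expected if residuals were normal
        points.append((observed, expected))
    return points


# Made-up residuals for illustration only.
residuals = [-9.1, -6.4, -3.0, -1.2, 0.3, 1.1, 2.8, 3.9, 5.2, 6.4]
points = pp_plot_points(residuals)
largest_gap = max(abs(observed - expected) for observed, expected in points)
print(f"largest distance from the diagonal: {largest_gap:.3f}")
```

Points close to the diagonal (small gaps) are what we hope to see; a systematic S-shape or bow suggests skewed or heavy-tailed residuals.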

Assumption 7

The residuals should have a constant variance (homoscedasticity). This means that the spread of the residuals should be similar for all values of the independent variable. This can be checked by inspecting the scatterplot of the standardized residuals versus the standardized predicted values: the points should form a roughly even band around zero, without a funnel or fan shape. This plot can be obtained by clicking Plots in the Linear Regression dialog and moving *ZRESID to the Y box and *ZPRED to the X box. You can also use the Breusch-Pagan test or the White test to test the homoscedasticity of the residuals. These tests are not part of the Linear Regression dialog; in recent SPSS versions they are offered under Analyze > General Linear Model > Univariate > Options, and in older versions you may need a macro or an extension. If the residuals have a non-constant variance (heteroscedasticity), you may need to transform your data or use a different type of regression analysis.
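The idea behind the Breusch-Pagan test can be sketched in plain Python: regress the squared residuals on the predictor and check whether that auxiliary regression explains anything. The version below is Koenker's studentized variant (LM = n times the auxiliary R-squared), which is an assumption on our part and may not match the exact statistic your SPSS version prints. The data are made up, and the helper names are hypothetical.

```python
from math import erfc, sqrt
from statistics import mean


def chi2_sf_df1(value):
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return erfc(sqrt(value / 2))


def ols(x, y):
    """Intercept, slope, and R-squared of a one-predictor least-squares fit."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)  # must be non-zero
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope, sxy ** 2 / (sxx * syy)


def koenker_bp_test(x, y):
    """LM statistic and p-value; a small p-value suggests heteroscedasticity."""
    intercept, slope, _ = ols(x, y)
    squared_residuals = [(yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y)]
    _, _, aux_r_squared = ols(x, squared_residuals)  # auxiliary regression
    lm = len(x) * aux_r_squared
    return lm, chi2_sf_df1(lm)  # 1 df because there is one predictor


# Made-up data whose scatter widens as x grows.
x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
y = [1.1, 1.8, 3.3, 3.6, 5.5, 5.4, 7.7, 7.2, 9.9, 9.0]
lm, p_value = koenker_bp_test(x, y)
print(f"LM = {lm:.2f}, p = {p_value:.3f}")
```

The chi-square approximation is a large-sample result, so with only a handful of cases the p-value should be read as a rough guide alongside the residual plot.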

Step 5: Interpret Linear Regression Output in SPSS

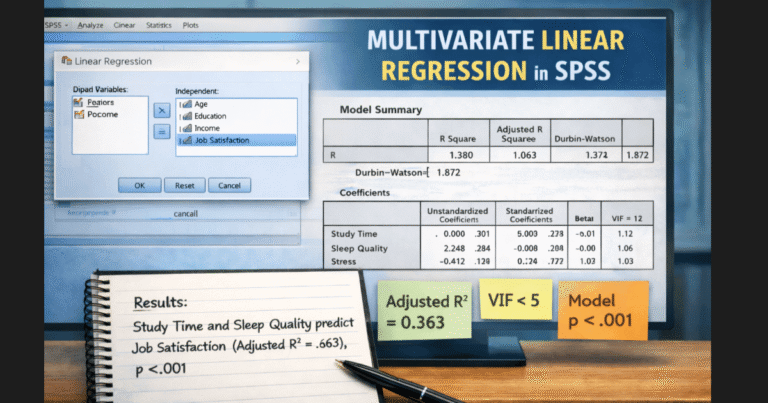

Once the assumptions have been checked and satisfied, the next step is to interpret the results in the SPSS output. The main information we need comes from the Model Summary table, the ANOVA table, and the Coefficients table.

The Model Summary table provides the R, R-squared, and Std. Error of the Estimate.

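- R shows the strength of the relationship between IQ and performance. With a single predictor, it equals the absolute value of the Pearson correlation between the two variables.

- R-squared tells us how much of the variation in the dependent variable is explained by the independent variable. In our example, IQ explains 40.3 percent of the variation in job performance, which suggests that IQ is a meaningful predictor of performance in this sample.

- Std. Error of the Estimate is the standard deviation of the residuals, that is, the typical distance between the observed and the predicted performance scores.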

The ANOVA table tells us whether the regression model is statistically significant overall. If the p-value is less than .05, we conclude that the model significantly predicts the outcome.
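The F statistic in the ANOVA table is tied directly to R-squared and the sample size, which makes for a handy consistency check. The sketch below uses the values from this example (R-squared = .403, N = 10, one predictor); the function name `f_from_r_squared` is our own.

```python
def f_from_r_squared(r_squared, n, k=1):
    """F statistic and degrees of freedom implied by R-squared.

    n is the number of cases, k the number of predictors.
    """
    df_model = k
    df_residual = n - k - 1
    f = (r_squared / df_model) / ((1 - r_squared) / df_residual)
    return f, df_model, df_residual


f, df_model, df_residual = f_from_r_squared(0.403, n=10)
print(f"F({df_model}, {df_residual}) = {f:.2f}")
```

The p-value itself comes from the F distribution with these degrees of freedom, which SPSS looks up for you in the Sig. column.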

The Coefficients table is where the main story is told:

- The unstandardized coefficients (B) allow us to write the regression equation.

- The significance value (Sig.) shows whether IQ is a statistically significant predictor.

- The confidence intervals help confirm the precision of our estimates.

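If IQ has a significant p-value (p < .05), we conclude that IQ is a statistically significant predictor of performance. With a single predictor, this p-value is identical to the one in the ANOVA table. Together with R-squared and the regression equation, this gives us enough statistical evidence to answer our research question.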
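To show how the pieces of the Coefficients table fit together, the sketch below reconstructs an approximate 95% confidence interval for the IQ slope from the values reported in this example (B = 0.64, F(1, 8) = 5.40). It relies on two facts about simple regression: the slope's t statistic is the square root of F, and the standard error is B divided by t. The critical value 2.306 is the two-tailed 5% cutoff of t with 8 degrees of freedom from standard tables. Because the inputs are rounded, treat the result as an approximation; SPSS prints the exact interval.

```python
from math import sqrt

B = 0.64                # unstandardized slope reported in this example
F_STATISTIC = 5.40      # overall F(1, 8) reported in this example
T_CRITICAL_DF8 = 2.306  # two-tailed 5% critical value of t with 8 df (t table)

t_statistic = sqrt(F_STATISTIC)      # holds for one-predictor models only
standard_error = B / t_statistic     # implied Std. Error of the slope
margin = T_CRITICAL_DF8 * standard_error
lower, upper = B - margin, B + margin

print(f"t = {t_statistic:.2f}, SE = {standard_error:.3f}")
print(f"approximate 95% CI for the slope: [{lower:.3f}, {upper:.3f}]")
```

An interval that lies entirely above zero tells the same story as p < .05: the data are hard to reconcile with a slope of zero. An interval this wide also shows how imprecise a slope estimated from 10 cases is.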

Step 6: Reporting a Linear Regression Test in APA Style

After analyzing your model, the final step is to present your findings in a clear and standardized format. APA style is commonly used for this in dissertations and research papers.

An APA-style report of a linear regression typically includes:

- A brief description of the variables

- The regression equation

- R-squared and significance levels

- Interpretation of the results

Example APA write-up

A simple linear regression was conducted to determine whether IQ predicts job performance. The model was statistically significant, F(1, 8) = 5.40, p = .049, and explained 40.3% of the variance in performance scores (R² = .403). IQ significantly predicted performance (B = 0.64, p = .049), indicating that higher IQ scores are associated with higher job performance. The regression equation was: Predicted Performance = 34.26 + 0.64(IQ).
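If you report many models, a small helper can keep the number formatting consistent. The sketch below applies two APA conventions used in the write-up above: statistics that cannot exceed 1 (such as p and R²) are written without a leading zero, and very small p-values are reported as p < .001. The function names are hypothetical, and the values passed in are the ones from this example.

```python
def apa_decimal(value, digits=3):
    """Format a statistic bounded by 1 (p, R-squared) without a leading zero."""
    text = f"{value:.{digits}f}"
    return text[1:] if text.startswith("0.") else text


def apa_p(p):
    """Report exact p-values, except very small ones."""
    return "p < .001" if p < 0.001 else f"p = {apa_decimal(p)}"


def apa_regression_summary(f, df_model, df_residual, p, r_squared):
    """One-line model summary in the style of the example write-up."""
    return (f"F({df_model}, {df_residual}) = {f:.2f}, {apa_p(p)}, "
            f"R² = {apa_decimal(r_squared)}")


print(apa_regression_summary(5.40, 1, 8, 0.049, 0.403))
```

In a formatted manuscript, the statistical symbols (F, p, R², B) are also set in italics, which plain text cannot show.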

Including a scatterplot with a fitted regression line helps strengthen your reporting and visualization.

Confidently Run Linear Regression in SPSS with Expert Support

Linear regression in SPSS is a powerful technique for answering predictive research questions. When examining academic performance, workplace outcomes, customer behavior or any other continuous relationship, SPSS gives you the tools to test your hypotheses. However, challenges such as assumption violations, outliers, and complex reporting requirements can slow down your entire dissertation timeline.
